Automatic1111 is one of the first and most popular user interfaces for Stable Diffusion. It is a feature-rich web UI that offers extensive customization options, support for a wide range of models and extensions, and an approachable interface, which makes it popular with both beginners and advanced users.

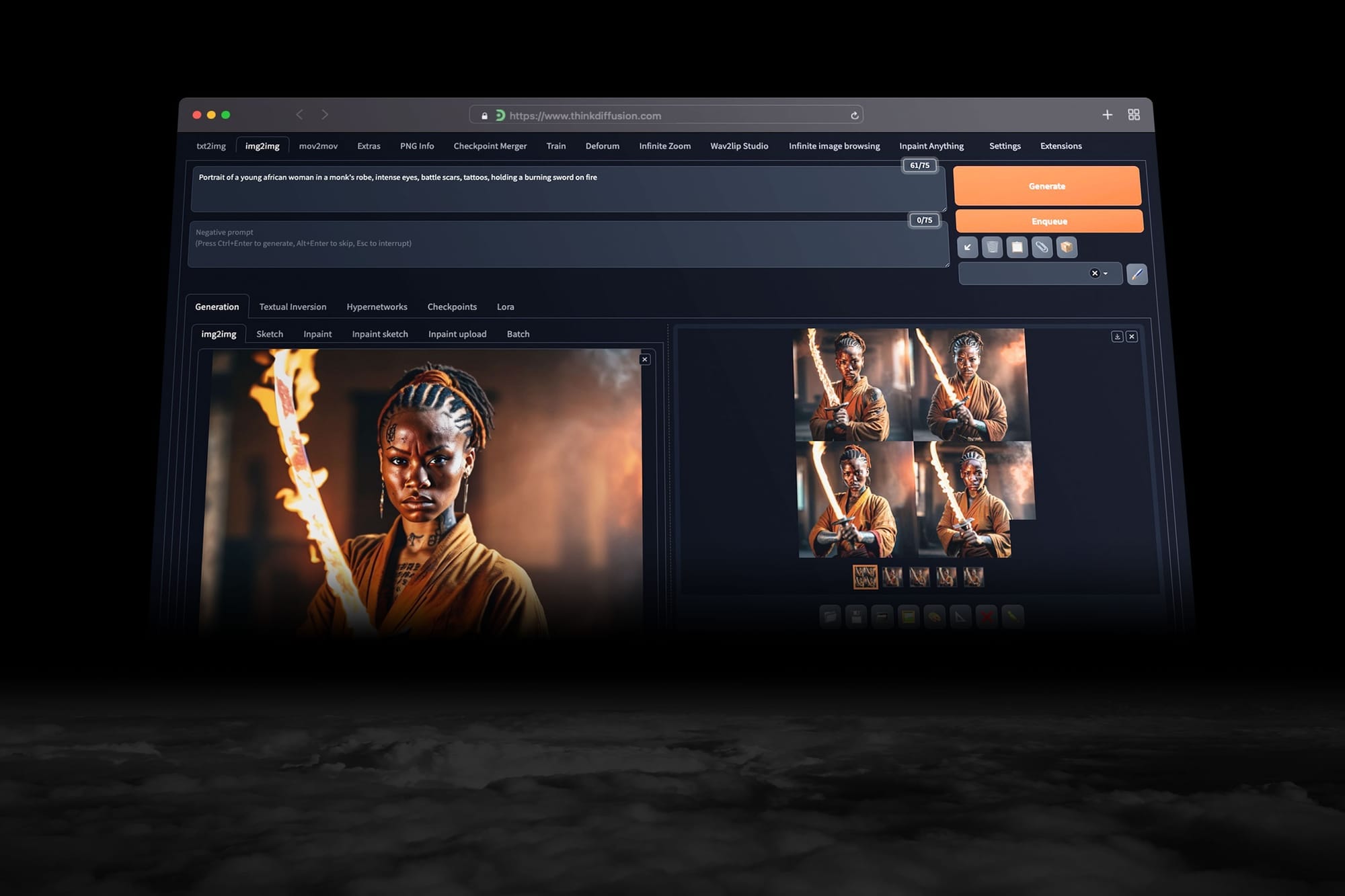

Automatic1111 is widely treated as the gold-standard UI for accessing everything Stable Diffusion has to offer. With Automatic1111 on ThinkDiffusion, you get the same full access as a local installation, without the hassle of managing it yourself.

Powerful Auto1111 Use-Cases

1. Image Generation

Image generation involves creating entirely new images based on descriptive text prompts. This is the core functionality of Automatic1111, allowing users to produce diverse visuals in a variety of styles with just a few words.

- Text-to-Image Generation: Create high-quality images from text prompts.

- Style Transfer: Apply specific artistic or photographic styles to generated images.

- Variations of an Image: Generate multiple variations of a base image.
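Text-to-image generation can also be scripted. The sketch below assumes a local web UI that was started with the --api flag and listens on the default http://127.0.0.1:7860 address. The helper names are illustrative, and the fields shown are only a small subset of what the /sdapi/v1/txt2img endpoint accepts.

```python
import base64
import json
import urllib.request

# Assumption: the web UI was launched with --api and uses the default port.
BASE_URL = "http://127.0.0.1:7860"


def build_txt2img_payload(prompt, negative_prompt="", steps=20,
                          width=512, height=512, batch_size=1, seed=-1):
    """Return the JSON body for POST /sdapi/v1/txt2img."""
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,  # elements to steer away from
        "steps": steps,
        "width": width,
        "height": height,
        "batch_size": batch_size,  # images generated per call
        "seed": seed,              # -1 asks the server for a random seed
    }


def txt2img(payload, base_url=BASE_URL):
    """Send the payload and return the generated images as PNG bytes."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The API returns each image as a base64-encoded string.
    return [base64.b64decode(image) for image in body["images"]]
```

Calling txt2img(build_txt2img_payload("a lighthouse at dusk, oil painting", negative_prompt="blurry")) against a running instance should return a list of PNG byte strings that can be written straight to disk.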

2. Image Manipulation

Image manipulation focuses on modifying existing images, either by editing parts, extending boundaries, or applying transformations. This is ideal for enhancing or altering visuals while maintaining control over specific areas.

- Inpainting: Edit specific parts of an image while preserving the context (e.g., removing objects or replacing details). It's used for selective editing, object removal, or adding new elements to existing images. Inpainting is a key tool for precise image manipulation with AI.

- Outpainting: Expand the edges of an image while maintaining the original style and composition.

- Image-to-Image Transformation: Modify existing images by applying prompt-based transformations or style changes. Sometimes referred to as Img2Img or Image2Image, it's a technique that uses an existing image as a starting point for generation. It allows for guided modifications, style transfers, or variations of an original image. Img2img is fairly simple in comparison to more advanced tools like ControlNet.

- Face Restoration: Enhance facial details in images using built-in tools like GFPGAN or CodeFormer.
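For readers who script these edits, here is a minimal sketch of an img2img request body, assuming the same --api setup as a local install. The function names are hypothetical; init_images, denoising_strength, and mask are fields of the /sdapi/v1/img2img endpoint, and supplying a mask turns the request into an inpainting job.

```python
import base64


def encode_image(raw_bytes):
    """Base64-encode image bytes the way the API expects them."""
    return base64.b64encode(raw_bytes).decode("ascii")


def build_img2img_payload(image_bytes, prompt, denoising_strength=0.5,
                          mask_bytes=None):
    """Return the JSON body for POST /sdapi/v1/img2img.

    With mask_bytes set, the request becomes an inpainting job. By default
    the white areas of the mask are repainted and the black areas are kept.
    """
    payload = {
        "init_images": [encode_image(image_bytes)],
        "prompt": prompt,
        # Low values stay close to the input image; values near 1.0
        # let the prompt override most of it.
        "denoising_strength": denoising_strength,
    }
    if mask_bytes is not None:
        payload["mask"] = encode_image(mask_bytes)
    return payload
```

A low denoising strength suits light retouching, while higher values are closer to generating a new image that only borrows the original composition.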

3. Animation and Video

This involves applying Stable Diffusion's capabilities to video and animation workflows, enabling creative transformations of frames or the generation of entirely new sequences.

- Frame Interpolation: Create smooth transitions between frames for animations or video.

- Video-to-Video: Apply Stable Diffusion effects and styles to video frames.

- Prompt-Based Animation: Generate animations by transitioning between prompts.

4. Customization and Fine-Tuning

Customization allows users to tailor Stable Diffusion models to specific needs or preferences. Fine-tuning tools like LoRA and Textual Inversion enable creating highly specialized outputs.

- LoRA (Low-Rank Adaptation): Use LoRA models to specialize Stable Diffusion for specific styles or subjects. Training a LoRA requires external tools.

- Custom Models: Load and use community-trained models for specific themes or styles (e.g., anime, realism, fantasy).

- Textual Inversion: Train and use embeddings to create or refine models for unique concepts, styles, or people.

- Hypernetworks: Apply pre-trained hypernetworks for more control over the output style.

5. Creative Design

This use case focuses on leveraging AI to assist in designing visual assets, such as concept art, logos, or fashion sketches. It’s ideal for professionals in creative industries.

- Concept Art Creation: Generate concept art for video games, films, and more.

- Logo and Branding Design: Create logos or branding elements based on text prompts.

- Fashion Design: Generate clothing designs or explore patterns for the fashion industry.

- Storyboarding: Create storyboards for films or advertisements with scene-based prompts.

6. AI-Assisted Workflows

AI-assisted workflows streamline repetitive or complex tasks like batch processing, upscaling, or refining images. These features enhance productivity and efficiency.

- Batch Processing: Automate the creation or modification of multiple images in a single operation.

- Upscaling: Improve image resolution with tools like ESRGAN or Real-ESRGAN.

- Negative Prompts: Fine-tune results by specifying elements to exclude.

7. Personalization

Personalization involves creating visuals tailored to specific requirements, such as custom characters, portraits, or unique interior designs.

- Character Creation: Design characters for games, books, or art projects.

- Portrait Creation: Generate realistic or stylized portraits based on descriptions.

- Interior Design: Visualize interiors or architecture based on descriptive prompts.

8. Education and Experimentation

These use cases support learning and experimentation with generative AI, making it a valuable tool for educators, students, and researchers.

- Teaching Tool: Educate students on AI-generated art and its applications.

- Prompt Engineering Practice: Experiment with crafting prompts to understand how input affects outputs.

- AI Research: Explore novel applications of Stable Diffusion in creative and technical fields.

Why Universities and Educators Love Open Source

In today’s rapidly evolving academic landscape, open-source technology is playing a critical role in helping universities and educators stay ahead of the curve. From fostering creativity to reducing costs, open-source platforms have become an essential part of higher education, especially in the fields of AI, art, and design.

The Best Google Colab Alternative for AI Art Classes

As AI art continues to transform the creative landscape, educators are increasingly integrating tools like Stable Diffusion into their classrooms. While Google Colab has been a popular choice for running AI models, it’s not always the most user-friendly or efficient platform for educators.

9. Meme and Fun Content

This category includes creating entertaining, imaginative, or shareable content. It’s ideal for casual use or social media.

- Meme Creation: Generate unique and shareable images for memes.

- Fantasy Worlds: Visualize fantasy settings, creatures, or scenarios.

- Reimagining Media: Create alternate versions of famous artworks, movie posters, or book covers.

10. Community and Collaboration

Community and collaboration tools encourage users to engage with the open-source ecosystem, sharing ideas, prompts, and technical innovations.

- Open Source Contribution: Experiment with code modifications or contribute new features/plugins.

- Collaborative Projects: Share prompts and workflows with the global Automatic1111 community.

See how Chivas Regal and RealDreams used ThinkDiffusion for their marketing campaign.

11. Professional Use Cases

Professional use cases focus on using Stable Diffusion for commercial purposes, such as advertising, marketing, or product visualization.

- Advertising and Marketing: Design social media content, ad visuals, and campaign imagery.

- E-commerce: Create product mockups or enhance listing images.

- Architectural Visualization: Generate conceptual designs for real estate or urban planning.

12. Advanced Features

Advanced features provide precise control over outputs, enabling experienced users to achieve sophisticated results.

- ControlNet Integration: Add control over poses, depth, or sketches to guide generation.

- ComfyUI Integration: Use node-based workflows for advanced customization.

- Image Masking: Precisely define areas of the image to modify or preserve.

- Multi-Conditioning: Blend multiple prompts to generate composite results.
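One way to blend prompts in the web UI is its composable-diffusion syntax, which joins prompts with an uppercase AND and accepts an optional weight after each one. The helper below is a hypothetical convenience for building such a prompt string, not part of Automatic1111 itself.

```python
def combine_prompts(weighted_prompts):
    """Join (prompt, weight) pairs with the web UI's AND keyword."""
    parts = [f"{prompt} :{weight}" for prompt, weight in weighted_prompts]
    return " AND ".join(parts)
```

For example, combine_prompts([("a cat", 1.2), ("a dog", 0.8)]) produces a prompt that weights the cat more heavily than the dog.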

All this flexibility makes Automatic1111 a powerful tool for artists, designers, educators, businesses, and AI enthusiasts.

Why use ThinkDiffusion's open-source cloud?

Unrestricted & Customizable

- Freely add any extensions to Automatic1111 and custom nodes to ComfyUI.

- Upload models, checkpoints, safetensors, LoRAs, embeddings, and textual inversions with one click from Civitai, Hugging Face, or a local computer.

- Each user can save settings that best support their workflow.

Full-Featured & Flexible

- Run multiple machines simultaneously.

- Train models and test workflows in parallel.

- Persistent dedicated drive for file organization.

Fast & Easy

- Save time with blazing fast speeds.

- No pricey hardware needed.

- No complex installs or maintenance required.

Automatic1111 Resources & Guides

Here are just a few of our many comprehensive Automatic1111 resources, including our free intro to Stable Diffusion course:

Automatic1111 Frequently Asked Questions

What is Automatic1111?

Automatic1111 is an open-source web user interface for running Stable Diffusion, a generative AI model for creating images from text prompts. It offers powerful features like text-to-image generation, image-to-image transformations, and support for extensions like ControlNet and LoRA.

How do I install Automatic1111?

- Install Python 3.10.6 and Git. The project's README recommends that exact Python release, because newer versions may not work with the PyTorch build it installs.

- Clone the Automatic1111 repository from GitHub:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git
cd stable-diffusion-webui

- Place your Stable Diffusion model file (e.g., model.ckpt or model.safetensors) into the models/Stable-diffusion folder.

- Run the installation script:

python launch.py

- Open the web interface at http://127.0.0.1:7860 in your browser.

Or you can skip the installation and launch it on ThinkDiffusion.
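Before starting a local install, it can help to confirm the two prerequisites from the first step. This is a minimal sketch with hypothetical function names; it only checks the lower bound on the Python version and whether a git executable is on PATH.

```python
import shutil
import sys

MIN_PYTHON = (3, 10, 6)  # version named in the install steps


def find_problems(python_version, git_path):
    """Return a list of problems; an empty list means ready to install."""
    problems = []
    if tuple(python_version) < MIN_PYTHON:
        found = ".".join(str(part) for part in python_version)
        problems.append(f"Python {found} is older than 3.10.6")
    if not git_path:
        problems.append("git was not found on PATH")
    return problems


def check_this_machine():
    """Run the checks against the current interpreter and PATH."""
    return find_problems(sys.version_info[:3], shutil.which("git"))
```

Running check_this_machine() with the interpreter you plan to use for the web UI returns an empty list when both prerequisites are met.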

How do I install Stable Diffusion in Automatic1111?

Stable Diffusion models can be added to Automatic1111 by downloading the desired .ckpt or .safetensors file and placing it in the models/Stable-diffusion folder. Restart the app to detect the new model.

In ThinkDiffusion, Stable Diffusion is one of dozens of pre-installed models.
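For a local install, the copy step can be scripted. The sketch below uses a hypothetical install_checkpoint helper and assumes webui_root points at the cloned stable-diffusion-webui folder.

```python
import shutil
from pathlib import Path

MODEL_SUFFIXES = {".ckpt", ".safetensors"}


def install_checkpoint(model_file, webui_root):
    """Copy a checkpoint into <webui_root>/models/Stable-diffusion."""
    source = Path(model_file)
    if source.suffix.lower() not in MODEL_SUFFIXES:
        raise ValueError(f"unsupported model file type: {source.suffix}")
    target_dir = Path(webui_root) / "models" / "Stable-diffusion"
    target_dir.mkdir(parents=True, exist_ok=True)
    # copy2 preserves timestamps and returns the path of the new file.
    return Path(shutil.copy2(source, target_dir))
```

The function returns the destination path, so a caller can log where the model landed before restarting the app.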

How do I successfully merge a pull request in Automatic1111?

- Fork the repository and clone it locally.

- Ensure your fork is up to date with the main branch of Automatic1111.

- Check out a new branch for your changes:

git checkout -b feature-branch

- Make your changes and commit them. Push the branch to your fork.

- Create a pull request in the original Automatic1111 repository. Ensure all tests pass and reviewers approve.

To merge a pull request:

- Repository owners use the GitHub interface to merge the request after approval.

- Use the Merge Commit or Squash and Merge options depending on the repository's contribution guidelines.

Or you can skip the installation hassle and launch it on ThinkDiffusion.

How do I change paths in Automatic1111?

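Paths in Automatic1111 can be changed by editing the webui-user.bat (Windows) or webui-user.sh (Linux/macOS) file. Update the COMMANDLINE_ARGS variable with the paths you need, for example a custom checkpoint folder:

set COMMANDLINE_ARGS=--ckpt-dir /path/to/models

That line uses the Windows set syntax; in webui-user.sh the equivalent is export COMMANDLINE_ARGS="--ckpt-dir /path/to/models". Output folders are not controlled by this variable. Change them in the web UI under Settings, in the paths for saving section.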

In ThinkDiffusion, a full-featured graphical file browser helps you navigate your files like a pro.
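For local installs, the two launcher files use different syntax for the same variable. The helper below is a hypothetical sketch that formats the COMMANDLINE_ARGS line for either file; it does not quote paths that contain spaces, so treat it as a starting point.

```python
def commandline_args_line(args, windows):
    """Return the COMMANDLINE_ARGS line for webui-user.bat or webui-user.sh."""
    joined = " ".join(args)
    if windows:
        # webui-user.bat uses the batch "set" command.
        return f"set COMMANDLINE_ARGS={joined}"
    # webui-user.sh is a shell script, so the variable is exported.
    return f'export COMMANDLINE_ARGS="{joined}"'
```

Passing ["--ckpt-dir", "/path/to/models"] yields the line to paste into the matching launcher file.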

How do I install transformers in Automatic1111?

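Run the following command in the Automatic1111 environment:

pip install transformers

This installs the Hugging Face Transformers library, which is required for some features like textual inversion and fine-tuning. A standard install already pulls in Transformers through the web UI's own requirements file, so this step is normally needed only to repair a broken environment.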

In ThinkDiffusion, you can natively upload textual inversions, LoRAs, hypernetworks, LyCORIS, and more without any additional installations.

How do I update Automatic1111?

- Navigate to the directory where Automatic1111 is installed.

- Pull the latest changes from the GitHub repository:

git pull

- Restart the application to apply updates.

In ThinkDiffusion, we automatically keep our versions of Automatic1111 up to date, and provide previous versions for convenience and flexibility.
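On a local install, the update can be wrapped in a small script. This sketch assumes the web UI was installed by cloning the repository with Git; update_webui is a hypothetical helper, and the run parameter exists only so the command can be swapped out when testing.

```python
import subprocess
from pathlib import Path


def update_webui(install_dir, run=subprocess.run):
    """Run `git pull` inside the install directory and return the result."""
    install_dir = Path(install_dir)
    if not (install_dir / ".git").exists():
        raise FileNotFoundError(f"{install_dir} is not a git checkout")
    # check=True raises CalledProcessError if git exits with an error.
    return run(["git", "pull"], cwd=install_dir, check=True)
```

After the pull succeeds, restart the application as described in the last step.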

How do I use LoRA in Automatic1111?

- Place your LoRA files (usually .safetensors) into the models/Lora directory.

- In the web interface, select the desired LoRA model under the "Extra Networks" tab or use the <lora:model_name:weight> syntax in your prompt.

- Adjust the weight to refine results.

In ThinkDiffusion, you can work with LoRAs exactly as you would locally, except without taking up your local storage space.
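In a prompt, the web UI expects LoRA references in the form <lora:filename:weight>, where filename is the LoRA file name without its extension. The helpers below are a hypothetical sketch for building such prompts in a script.

```python
def lora_tag(name, weight=1.0):
    """Format a LoRA reference; name is the file name without extension."""
    return f"<lora:{name}:{weight}>"


def add_loras(prompt, loras):
    """Append one tag per (name, weight) pair to the prompt."""
    tags = " ".join(lora_tag(name, weight) for name, weight in loras)
    return f"{prompt} {tags}".strip()
```

Lower weights blend the LoRA in more subtly, so trying a few values is usually the quickest way to tune the effect.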

How do I train a LoRA in Automatic1111?

LoRA training is not natively supported in Automatic1111, but you can use additional tools like DreamBooth or the kohya_ss training scripts. Once trained, move the LoRA model to the models/Lora folder to use it.

Here's a tutorial on training with Kohya.

How do I use SDXL in Automatic1111?

- Download the SDXL models and place them in the models/Stable-diffusion directory.

- Restart Automatic1111 and select the SDXL model from the model dropdown.

- Customize your prompts and generation settings for SDXL.

In ThinkDiffusion, SDXL is one of dozens of pre-installed models.
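On a local install started with --api, the model switch can also be scripted. This sketch assumes the list returned by GET /sdapi/v1/sd-models contains a title field per checkpoint and that POST /sdapi/v1/options accepts sd_model_checkpoint; the function names are hypothetical. It also sets the 1024x1024 resolution that SDXL was trained at.

```python
def find_checkpoint_title(models, keyword):
    """Return the title of the first listed checkpoint containing keyword."""
    for model in models:
        if keyword.lower() in model["title"].lower():
            return model["title"]
    return None


def build_model_switch_payload(title):
    """Return the JSON body for POST /sdapi/v1/options."""
    return {"sd_model_checkpoint": title}


def sdxl_size_overrides(payload):
    """Copy a generation payload and set SDXL's native 1024x1024 size."""
    return {**payload, "width": 1024, "height": 1024}
```

Generating at 512x512 with an SDXL checkpoint tends to give noticeably worse results, which is why the size override is worth applying alongside the model switch.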

How do I use Automatic1111?

We recommend taking our free intro to Stable Diffusion course here.

How do I add an upscaler to Automatic1111?

If you're running it locally:

- Download the desired upscaler model (e.g., ESRGAN or Real-ESRGAN).

- Place the model files in the models/ESRGAN directory.

- Restart Automatic1111 and choose the upscaler in the settings or image generation workflow.

On ThinkDiffusion, these upscalers are preinstalled and available in the "Extras" tab.
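Once an upscaler is available, it can be called from a script as well as from the UI. A minimal sketch, assuming a local instance started with --api: build_upscale_payload is a hypothetical helper, and the default upscaler name is an assumption that must match a name listed in your own install.

```python
import base64


def build_upscale_payload(image_bytes, upscaler="R-ESRGAN 4x+", scale=2):
    """Return the JSON body for POST /sdapi/v1/extra-single-image."""
    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "upscaler_1": upscaler,     # must match a name shown in the UI
        "upscaling_resize": scale,  # output size as a multiple of the input
    }
```

The response carries the upscaled picture as a base64 string in its image field, which can be decoded with base64.b64decode and saved.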

How do I install ControlNet in Automatic1111?

If you're running it locally:

- Install the ControlNet extension via the Extensions tab in Automatic1111.

- Download ControlNet models and place them in the models/ControlNet folder.

- Restart the app, and the ControlNet features will be available in the UI.

On ThinkDiffusion, ControlNet is preinstalled and available along with many ControlNet models and preprocessors.
