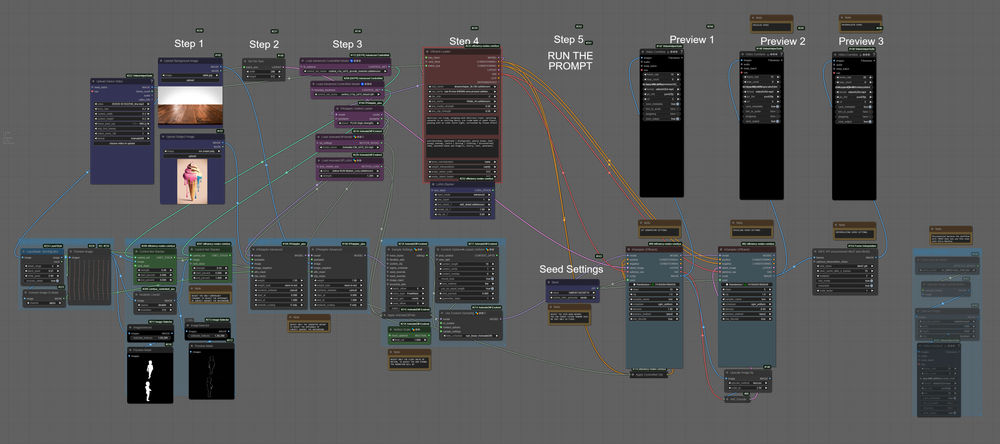

ComfyUI is an incredible tool for creating images and videos, but let's be honest: the learning curve can feel pretty steep when you're just starting out. The interface has tons of features, which is great for flexibility but can be overwhelming if you're new to all this.

To make your journey easier (and way more fun), we've put together this collection of 10 cool AI Text-to-Video and Video-to-Video workflows you can download and start using right away. Each one is designed for a specific creative task, from turning text into videos to adding lip-sync to characters.

These workflows are perfect for exploring ComfyUI’s full potential, whether you want to turn text prompts into dynamic videos or transform existing footage into entirely new visual styles.

| Workflow | Description | Link |
|---|---|---|
| Hunyuan Text2Video workflow | The Hunyuan Text2Video workflow in ComfyUI turns text prompts into high-quality, visually rich videos quickly and easily. | View Now |
| Mochi Text2Video workflow | The Mochi Text2Video workflow in ComfyUI quickly turns text prompts into smooth, high-quality videos with accurate motion and style. | View Now |
| LTX Text2Video workflow | The LTX Text2Video workflow in ComfyUI turns text prompts into smooth, high-quality videos with consistent motion and visuals. | View Now |
| Wan Video2Video Depth Control LoRA workflow | The Wan Video2Video Depth Control LoRA workflow in ComfyUI uses depth maps to add realistic structure and cinematic quality to your videos. | View Now |
| LatentSync Video2Video workflow | The LatentSync Video2Video workflow in ComfyUI creates videos with natural, high-quality lip sync by matching audio to a character’s mouth. | View Now |
| LTX Video2Video workflow | The LTX Video2Video workflow in ComfyUI quickly transforms existing videos into high-quality clips with smooth motion and customizable style. | View Now |
| Hunyuan Video2Video workflow | The Hunyuan Video2Video workflow in ComfyUI turns existing videos into high-quality, dynamic clips by blending your text prompts with the original motion and style. | View Now |
| Dance Noodle Video2Video workflow | The Dance Noodle Video2Video workflow in ComfyUI animates noodles with dance motions to create fun, playful videos. | View Now |
| Wan Video2Video Depth Control LoRA workflow | The Wan Depth Control LoRA workflow in ComfyUI uses depth maps to guide Wan 2.1, enabling structured, realistic video generation with accurate depth and minimal computational overhead. | View Now |
| Wan Video2Video Style Transfer workflow | The Wan Video2Video with Style Transfer workflow in ComfyUI transforms videos by applying the visual style of a reference image while preserving original motion and content. | View Now |

No installs. No downloads. Run ComfyUI workflows in the Cloud.

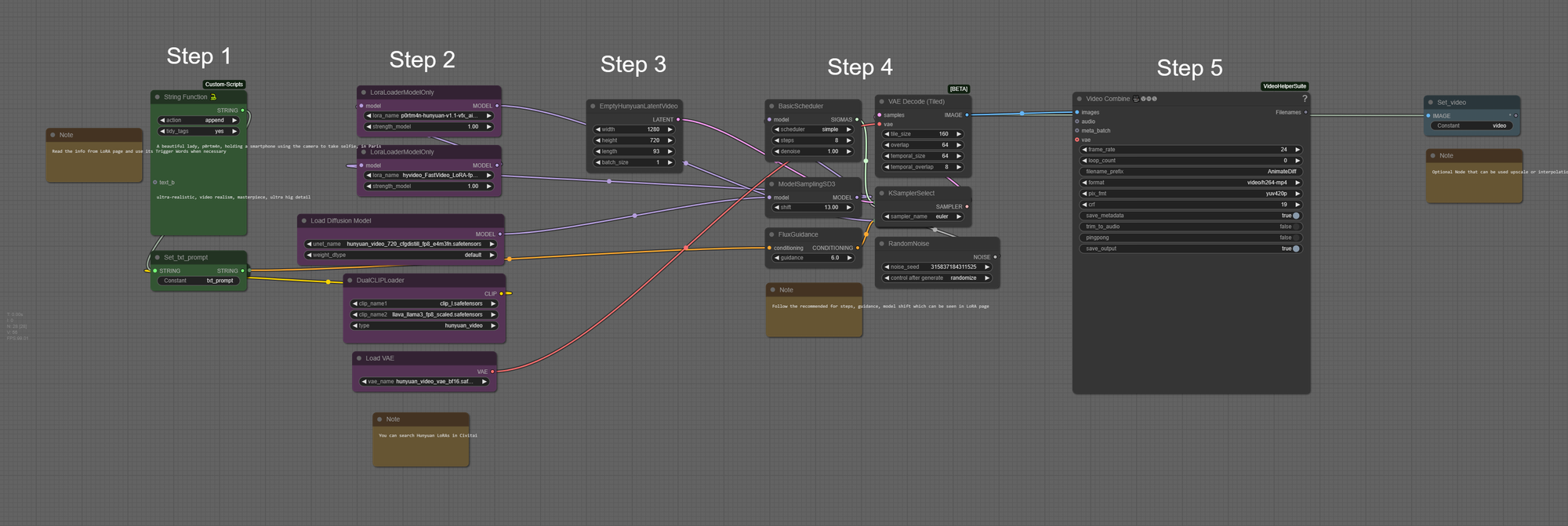

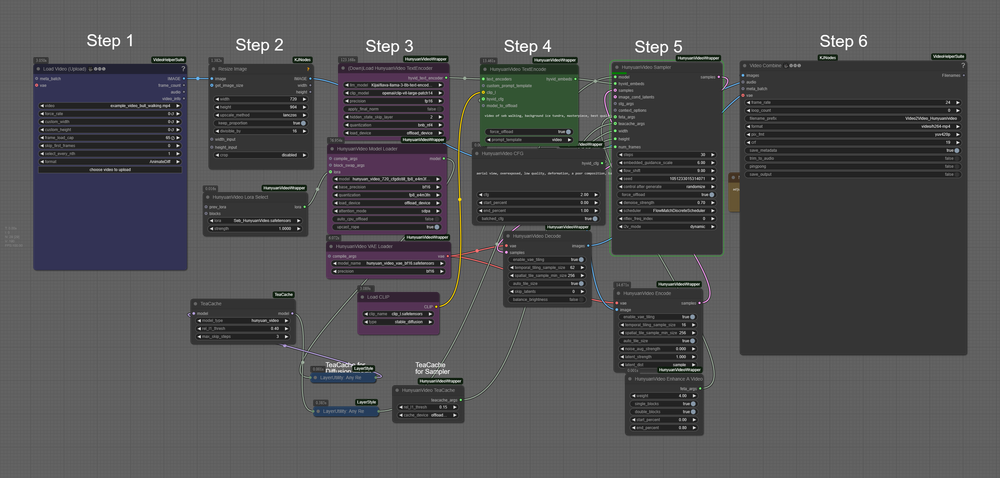

Hunyuan Text2Video workflow

Hunyuan Text-to-Video is an advanced open-source AI tool from Tencent that turns simple text descriptions into high-quality, professional videos within minutes.

What it's great for:

- Turns text descriptions into high-quality videos quickly

- Great for product demos, storytelling, and character animations

- Videos maintain smooth motion and visual consistency

- Uses a text encoder with a hybrid transformer model for accurate results

- Perfect for marketing, social media, and educational content

Hunyuan excels at transforming prompts into detailed scenes, whether you need product demos, creative storytelling, character animations, or promotional clips. Its architecture combines a strong text encoder with a hybrid transformer model, so the generated videos closely match your written instructions, maintain smooth motion, and stay visually consistent from start to finish.

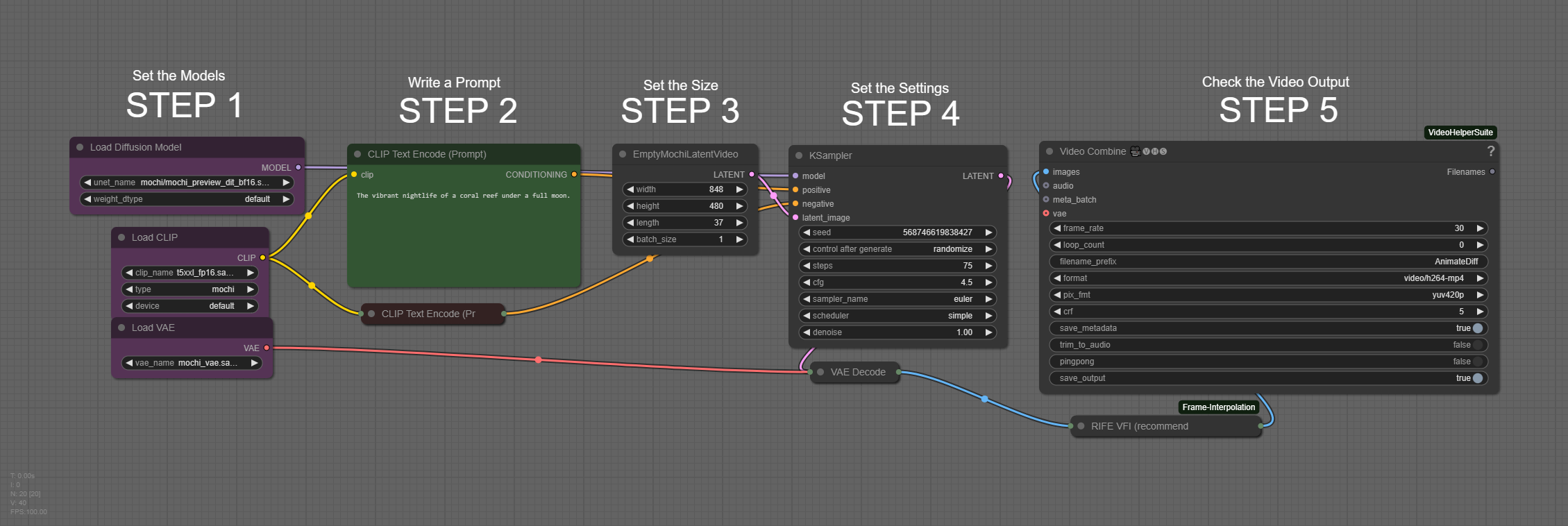

Mochi Text2Video workflow

What it's great for:

- Creates videos with smooth, realistic motion from text prompts

- Offers precise control over characters, scenes, and styles

- Follows instructions closely for accurate results

- Excels at detailed visuals and natural movement

- Ideal for creators, marketers, and educators

Mochi Text-to-Video excels at following user instructions closely, making it great for anyone who wants precise control over characters, scenes, and styles in their video projects. It’s especially good for creators, marketers, educators, and developers who need to quickly produce engaging content for storytelling, marketing, social media, education, or prototyping new ideas.

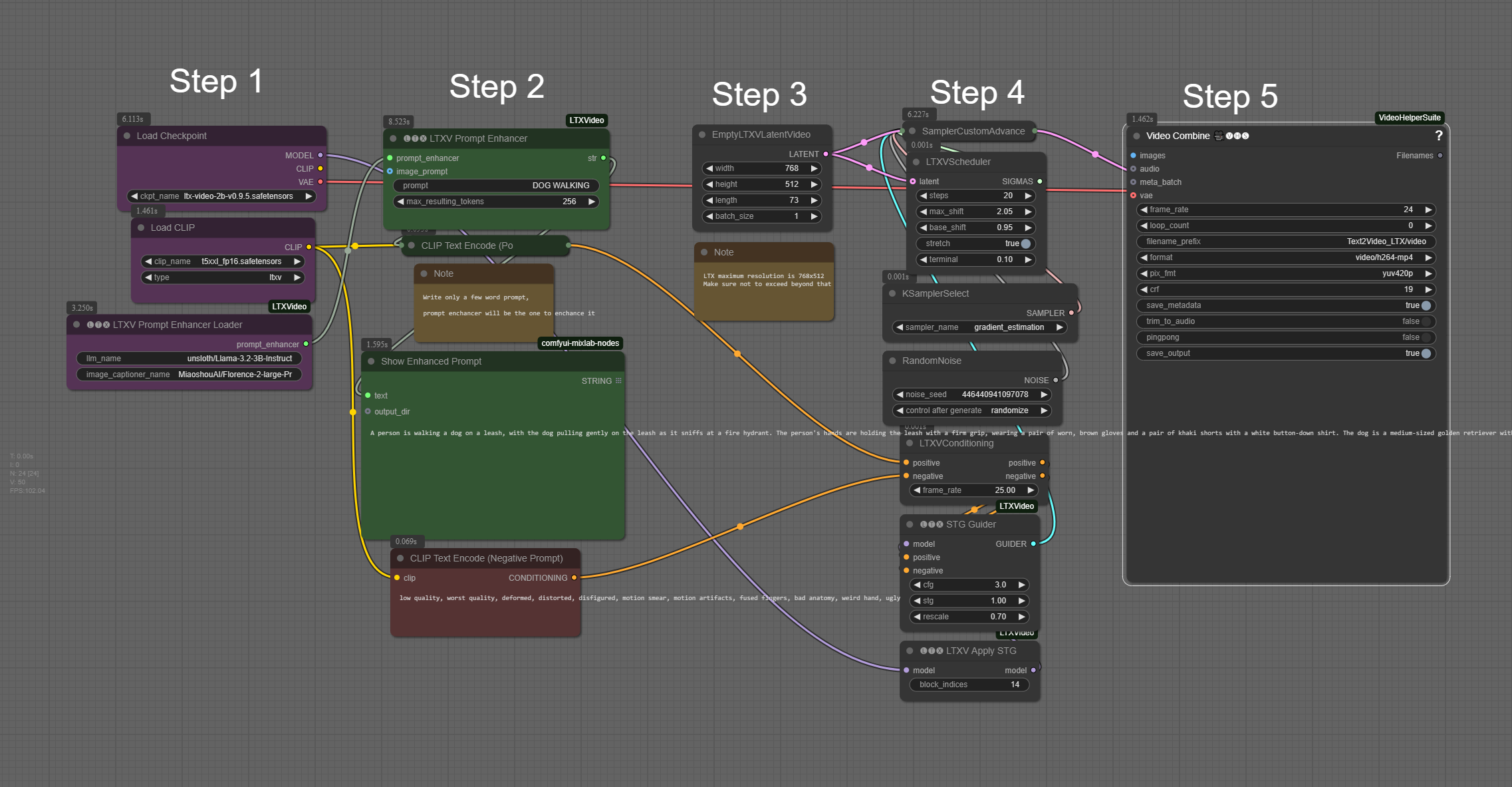

LTX Text2Video workflow

What it's great for:

- Quickly generates videos from scripts or blog posts

- Creates dynamic visuals and camera movements without editing skills

- Lets you define style, cast, backgrounds, and timing

- Works at 24fps with high-quality output

- Perfect for content creators wanting to visualize written content

LTX speeds up the video-making process so you can focus on what really matters: telling your story and connecting with your audience. LTX is one of the fastest video models available; its generation quality can be somewhat lower, but it's great for quick iterations and previsualization (pre-viz) use cases.

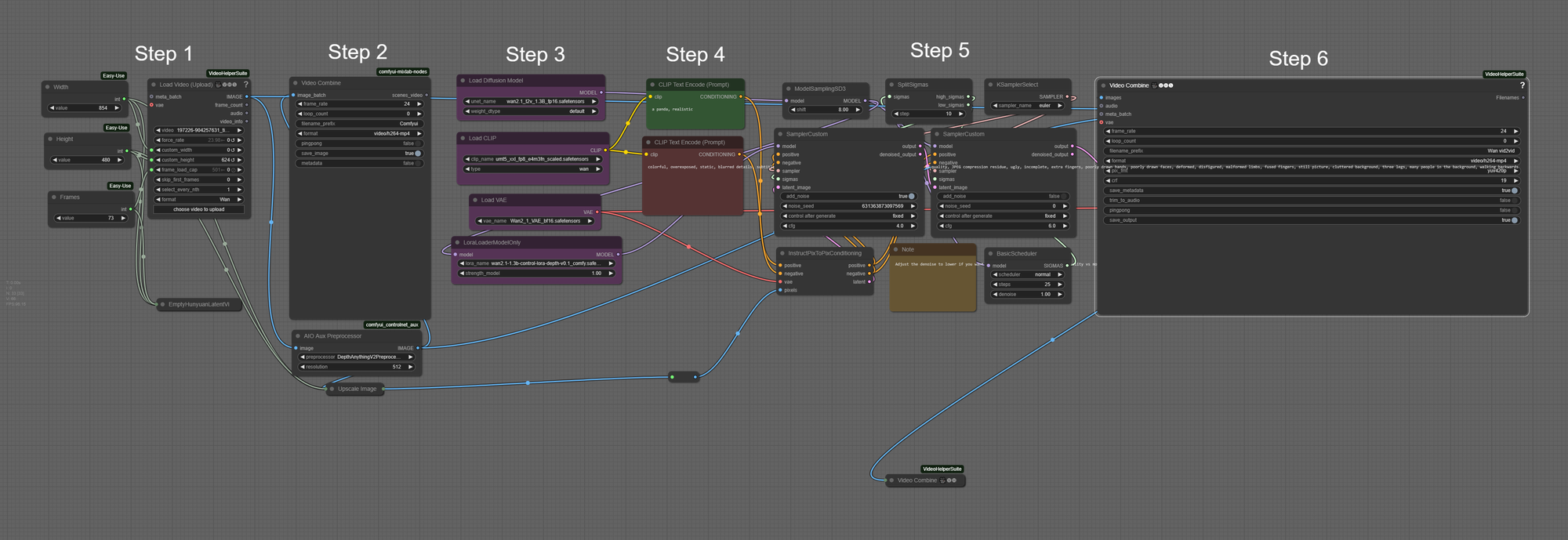

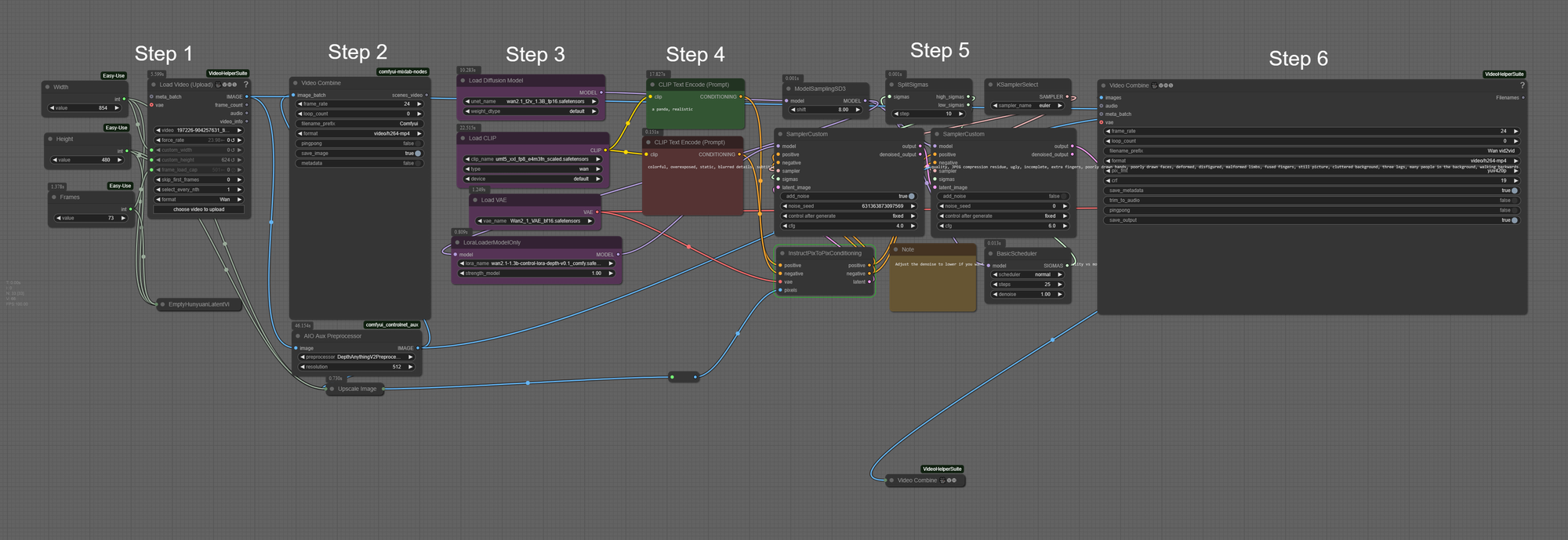

Wan Video2Video Depth Control LoRA workflow

What it's great for:

- Adds realistic depth and structure to videos

- Maintains consistent spatial relationships throughout videos

- Creates more natural and visually appealing results

- Ideal for architectural walkthroughs and cinematic projects

- Works with minimal setup for maximum creative flexibility

Wan2.1 Depth Control LoRA is a lightweight and efficient tool designed to give creators precise control over the structure and depth of AI-generated videos using the Wan2.1 model. It is ideal for both professionals and hobbyists who want to create high-quality, depth-aware videos with minimal setup and maximum creative flexibility.

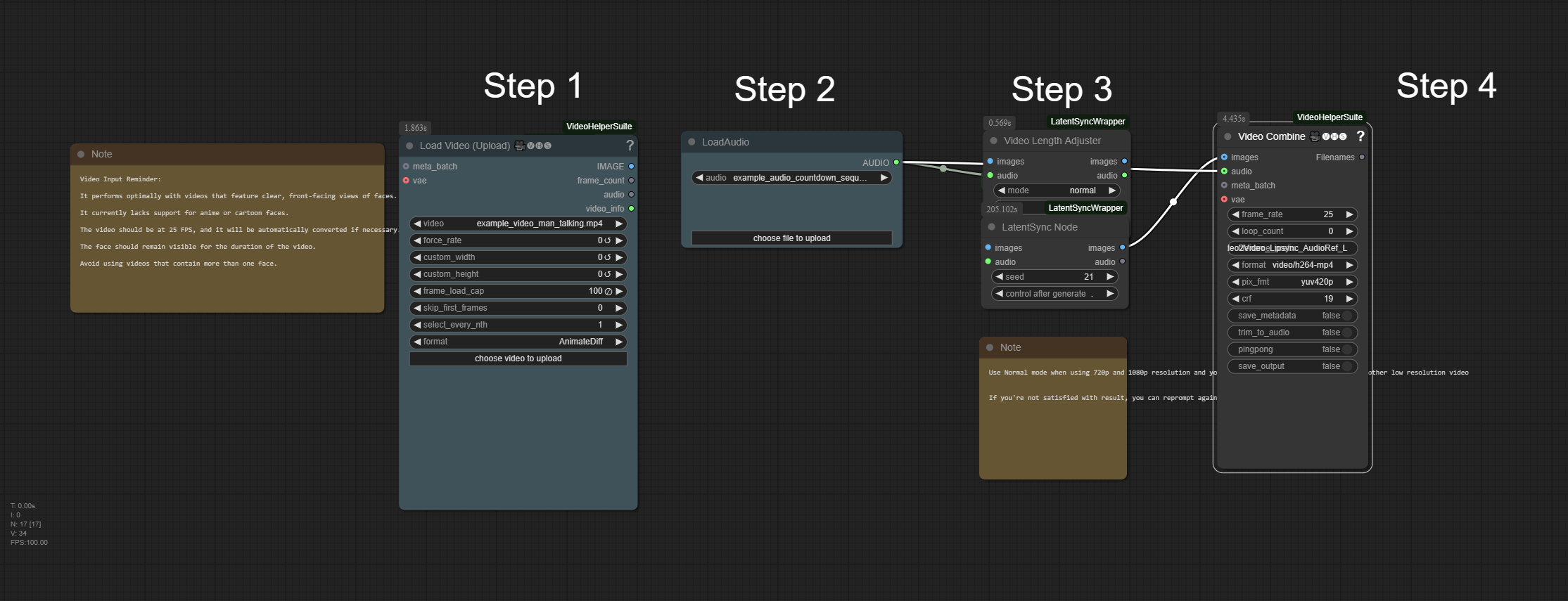

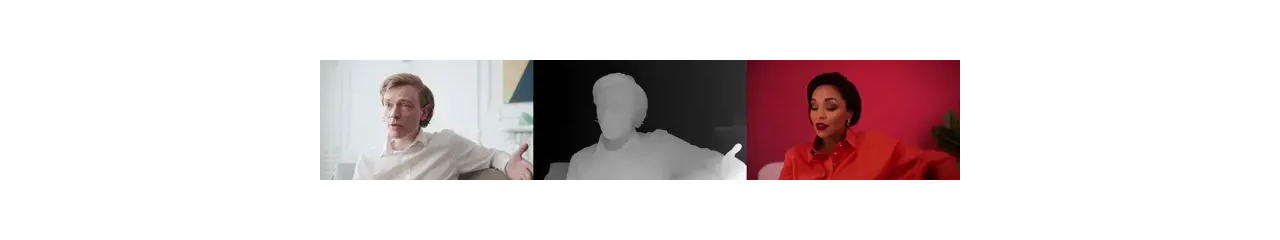

LatentSync Video2Video workflow


What it's great for:

- Creates natural lip-sync by matching audio to mouth movements

- Uses TREPA (Temporal Representation Alignment) for accurate results

- Produces smooth, flicker-free videos

- Works across multiple languages

- Perfect for dubbing, virtual avatars, and character animations

LatentSync is an open-source AI tool from ByteDance that specializes in advanced lip synchronization for videos. What makes it special is its use of a latent diffusion model and a feature called TREPA (Temporal Representation Alignment), which together ensure highly accurate, natural-looking lip movements and smooth, flicker-free video.

LatentSync works efficiently on regular GPUs and can handle multiple languages, making it ideal for film dubbing, virtual avatars, gaming, advertising, content creation, education, and creative projects. Its ability to create realistic, expressive facial animations quickly and easily sets it apart as a powerful solution for anyone needing professional-grade lip sync in their videos.


LTX Video2Video workflow

What it's great for:

- Transforms existing videos or images into new styles

- Generates high-res videos at 24fps (sometimes faster than playback)

- Maintains smooth motion and consistent visuals

- Reduces common AI problems like flickering

- No advanced video editing skills needed

LTX’s video-to-video feature is great for quickly turning static images or simple video clips into dynamic, professional-quality videos with smooth motion and consistent visuals.

LTX Video (also known as LTXV) stands out for its real-time generation speed: it produces high-resolution 24 fps video, in some cases faster than real-time playback, while keeping quality high and reducing common AI problems like flickering or inconsistent frames.

In short, LTX is perfect for anyone who wants to generate creative, high-quality videos quickly and easily.

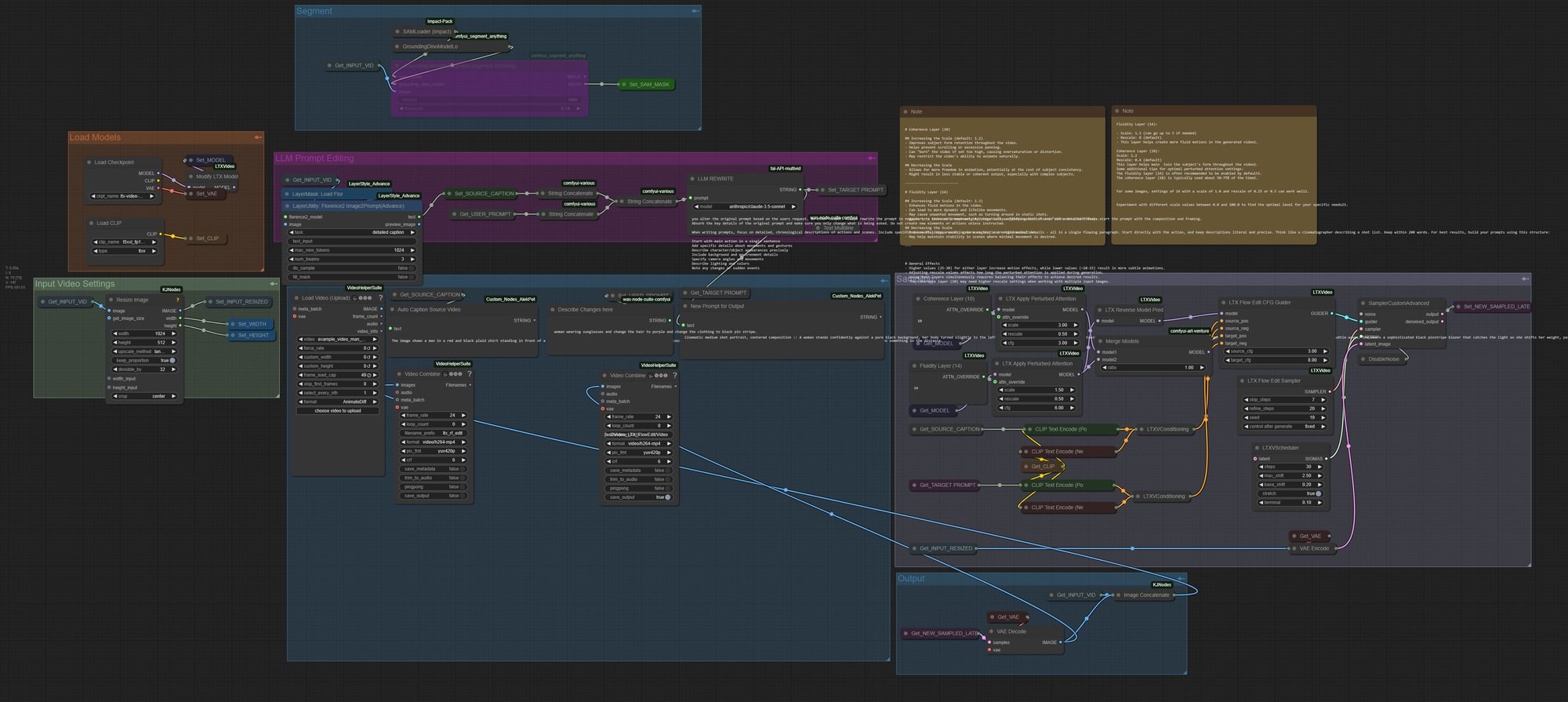

Hunyuan Video2Video workflow

What it's great for:

- Blends motion/structure of source video with new text prompts

- Preserves original motion flow while adding new styles and effects

- Uses a 13B-parameter model and a 3D Variational Autoencoder

- Creates stable, diverse outputs

- Versatile for various creative applications

Hunyuan Video is an advanced open-source framework that transforms video content by blending the motion and structure of a source video with user-defined text prompts. Using a powerful 13-billion-parameter model and a 3D Variational Autoencoder, it produces high-quality, stable, and diverse outputs.

Dance Noodle Video2Video workflow

What it's great for:

- Adds fun animation styles to ordinary objects (like dancing noodles!)

- Creates eye-catching motion effects

- Perfect for social media content that grabs attention

- Works for marketing campaigns needing visual impact

- Turns everyday footage into memorable animations

Wan Video2Video Depth Control LoRA workflow

What it's great for:

- Suitable for both simple projects and complex animations.

- Helps produce visually consistent and professional-looking videos.

- Minimal computational requirements compared to heavier tools like ControlNet.

- Great for creating dynamic, structured animations and storytelling.

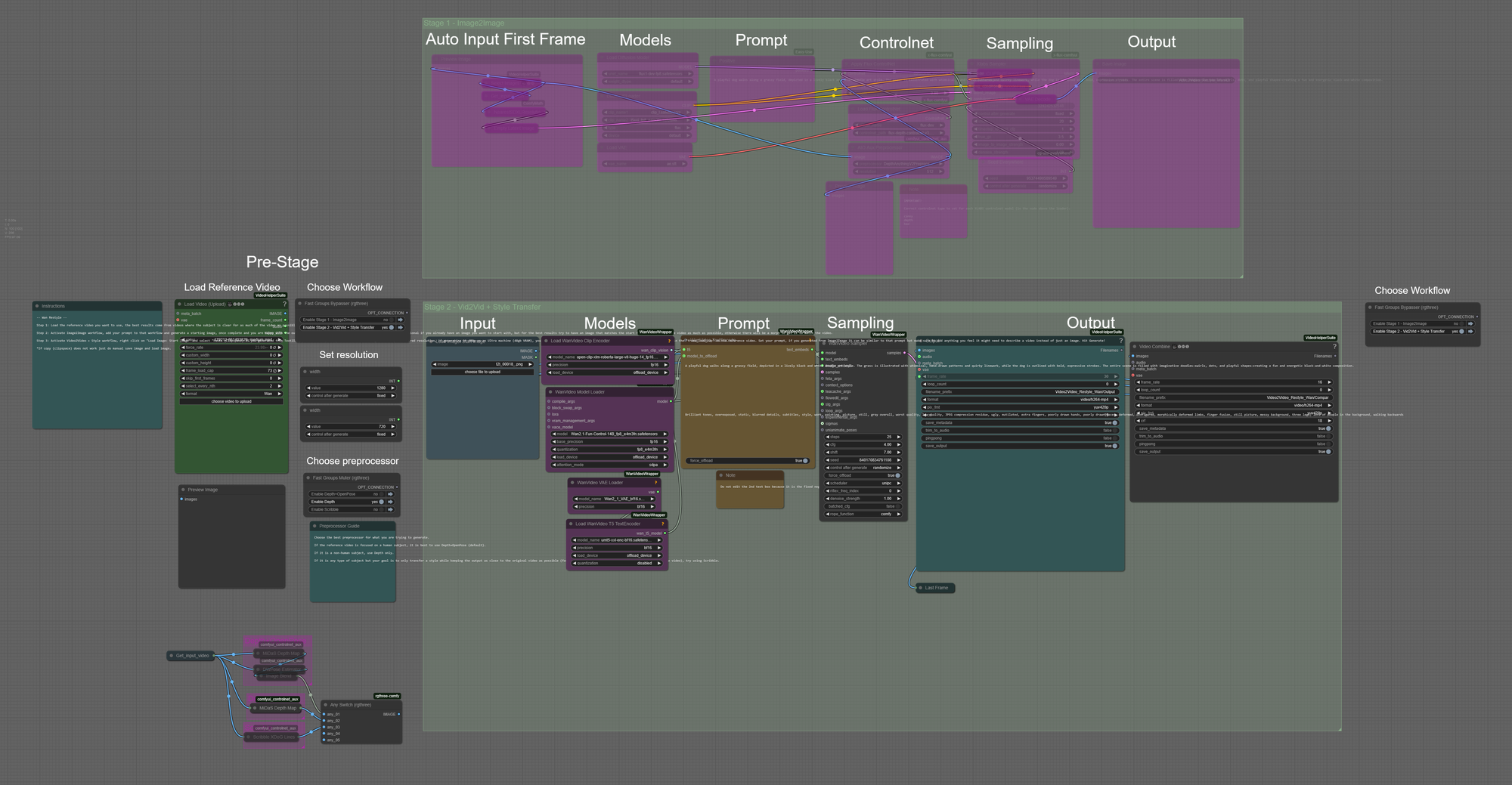

Wan Video2Video Style Transfer workflow

What it's great for:

- Maintains original motion, structure, and content while transforming the appearance for a hand-painted, animated, or custom artistic effect.

- Ensures frame-to-frame consistency, preventing flickering and keeping the style coherent.

- Allows for detailed customization through prompts and model settings, enabling creative control over the final output.

- Benefits artists, animators, enthusiasts, and professionals who want to stand out with visually distinctive, eye-catching video content.

If your computer's struggling or installation is giving you headaches, try these workflows in your browser with ThinkDiffusion. We provide the GPU power so you can focus on creating.

Enjoy experimenting with these workflows! And remember: every pro started as a beginner once.

If you enjoy ComfyUI and want to try Hyper-SD with ComfyUI and Blender in real time, check out this article: Real-Time Creativity: Leveraging Hyper SD and Blender with ComfyUI. And have fun out there with your videos!
