Hey there, video enthusiasts! It’s a thrill to see how quickly things are changing, especially in the way we create videos. Picture this: with just a few clicks, you can transform your existing clips into fresh, creative videos. Sounds fun, right? Whether you're just starting out or already a pro, Hunyuan is here to unleash your creativity. In this guide, we'll look at how Hunyuan video-to-video in ComfyUI is shaking things up, making it easier than ever to tell your story through video. So buckle up and get ready to take your video projects to the next level with Hunyuan!

How to run Hunyuan in ComfyUI

Installation guide

Local installs require additional steps that are beyond the scope of this guide.

Custom Nodes

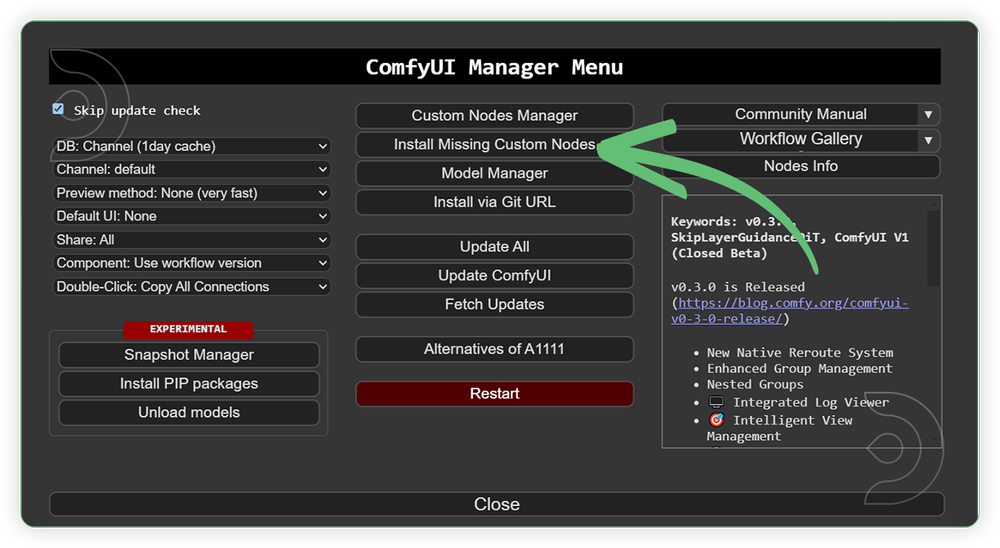

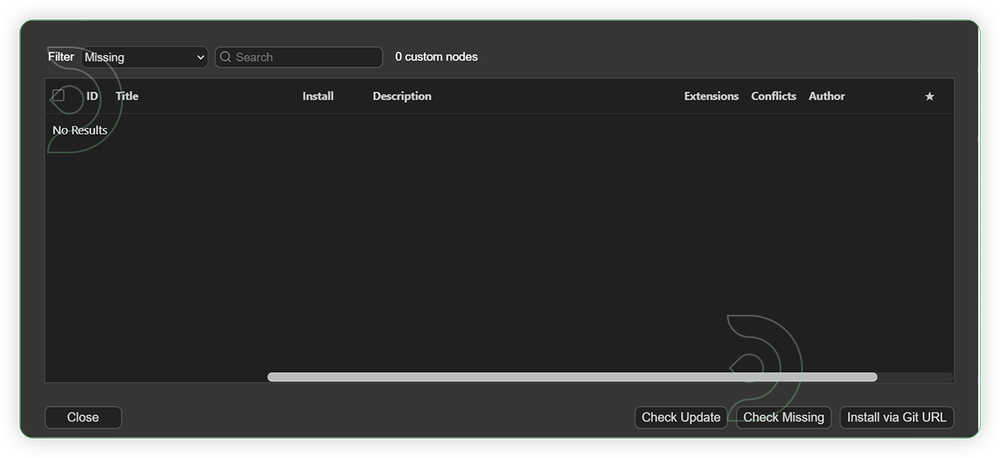

Red nodes in the workflow mean that required custom nodes are missing. Install them before running the workflow.

- Go to ComfyUI Manager > Click Install Missing Custom Nodes

- Check the list for any custom nodes that need to be installed and click Install.

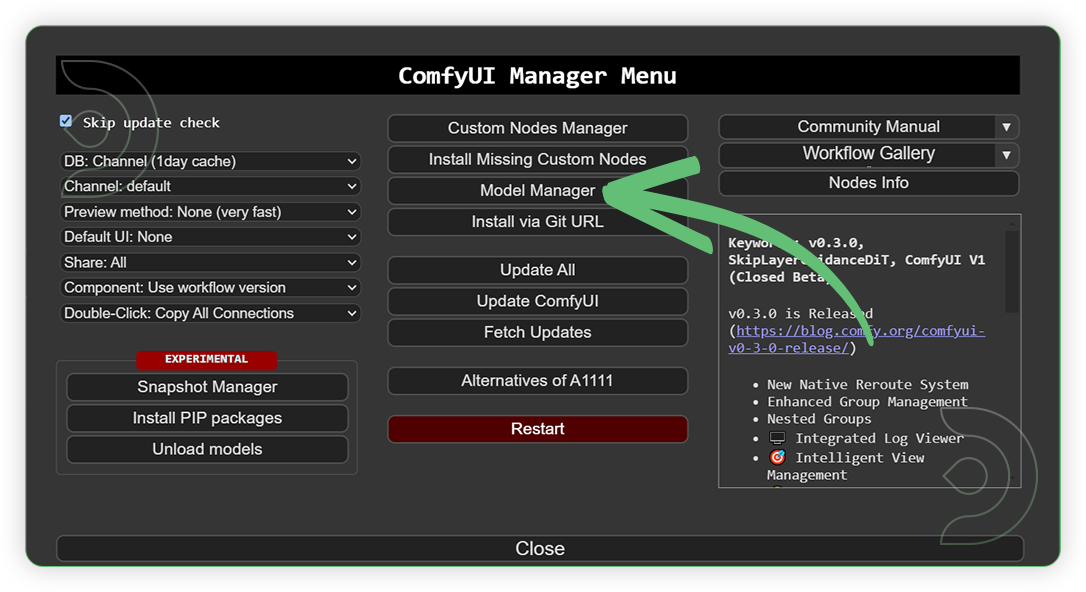

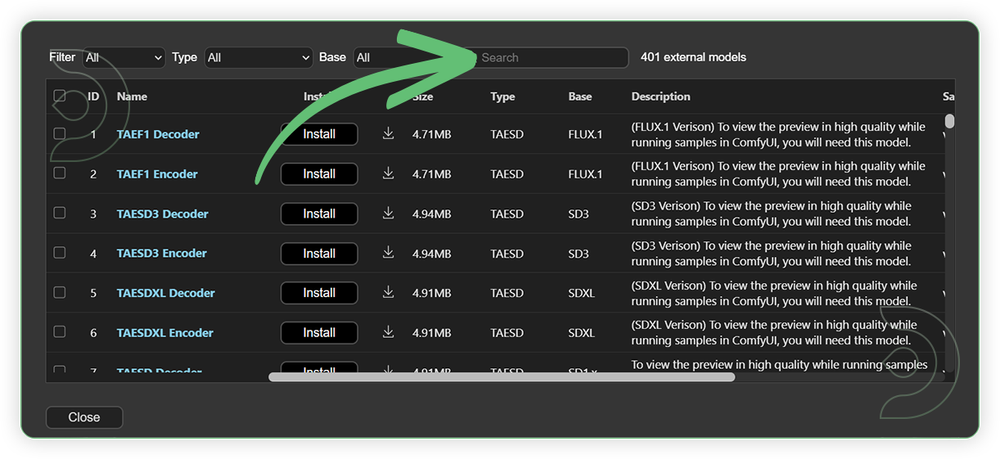

Models

Download the recommended models (see the list below) with ComfyUI Manager. Refresh or restart the machine after the files have finished downloading.

- Go to ComfyUI Manager > Click Model Manager

- Search for the exact model you need, click Install, and press Refresh when you are finished.

Model Path Source

If you prefer to install the models manually, copy each model's link address and paste it into ThinkDiffusion MyFiles using Upload URL, placing each file in the directory listed below.

| Model Name | Model Link Address | ThinkDiffusion Upload Directory |
|---|---|---|
| llava-llama-3-8b-text-encoder-tokenizer | Auto Download with Node | Auto Upload with Node |
| clip-vit-large-patch14 | Auto Download with Node | Auto Upload with Node |
| hunyuan_video_720_cfgdistill_fp8_e4m3fn.safetensors | | ...comfyui/models/diffusion_models/ |
| hunyuan_video_vae_bf16.safetensors | | ...comfyui/models/vae/ |
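If you place the files by hand, a quick check script can confirm that the two safetensors files landed in the right folders. This is a minimal sketch, assuming a standard ComfyUI folder layout rooted at a path you pass in; the `missing_models` helper and the `ComfyUI` default root are hypothetical and not part of ComfyUI or ThinkDiffusion. The text encoder and CLIP models are skipped because the node downloads them automatically.

```python
from pathlib import Path

# Expected files and their subfolders under <ComfyUI root>/models, taken from the table above.
# The root location is an assumption; adjust it to match your install.
REQUIRED_MODELS = {
    "diffusion_models": "hunyuan_video_720_cfgdistill_fp8_e4m3fn.safetensors",
    "vae": "hunyuan_video_vae_bf16.safetensors",
}


def missing_models(comfyui_root: str) -> list[str]:
    """Return the relative paths of required model files that are not present."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for subfolder, filename in REQUIRED_MODELS.items():
        if not (models_dir / subfolder / filename).is_file():
            missing.append(f"models/{subfolder}/{filename}")
    return missing


if __name__ == "__main__":
    import sys

    # Hypothetical default root; pass your own path as the first argument.
    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    gaps = missing_models(root)
    if gaps:
        print("Missing model files:")
        for gap in gaps:
            print("  " + gap)
    else:
        print("All required Hunyuan model files found.")
```

Run it with your ComfyUI folder as the argument; an empty result means both files are where the loader nodes expect them.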

Step-by-step guide for Hunyuan in ComfyUI

| Steps | Recommended Nodes |

|---|---|

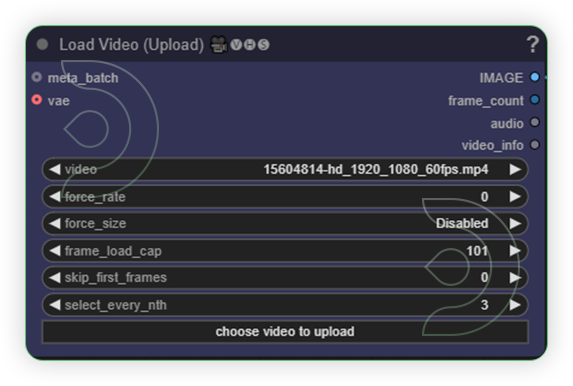

| 1. Load a Video Load a video that contains a main subject such as an object, person or animal. |

|

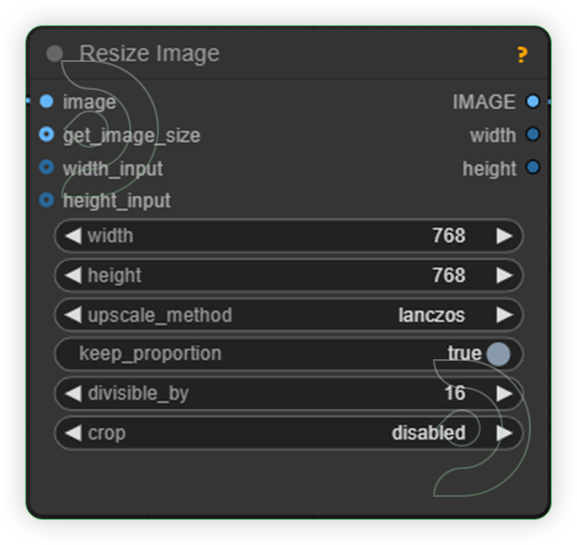

| 2. Set the Size You can set with 1280 x 720 resolution. Hunyuan supports resolution up to 720p. Disable the keep proportion in order for the Image Resize will take effect. |

|

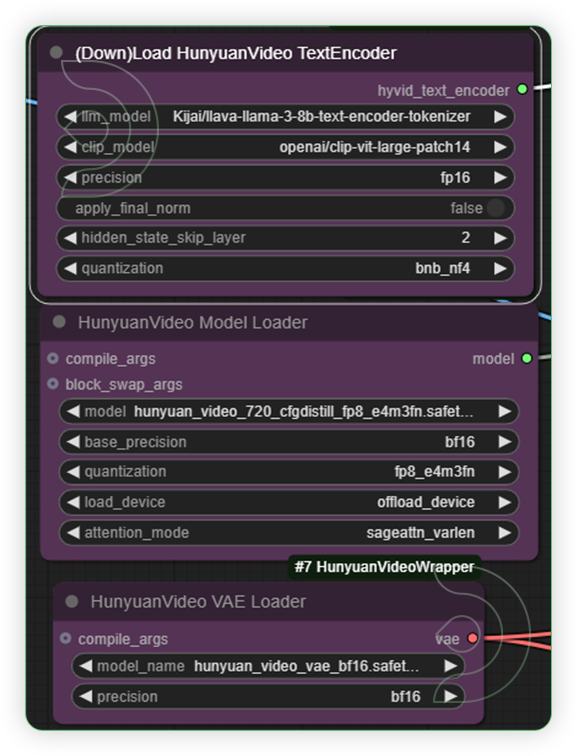

| 3. Set the Models Should follow the recommended models as shown in the image. Otherwise, the video generation will not work. |

|

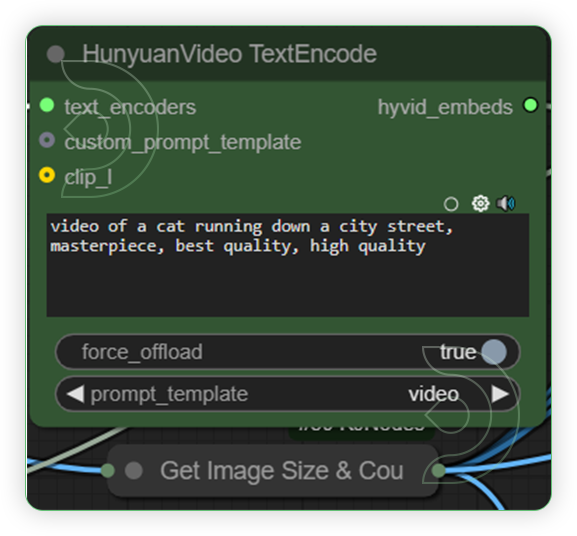

| 4. Write a Prompt Write a prompt what you want to be on the subject |

|

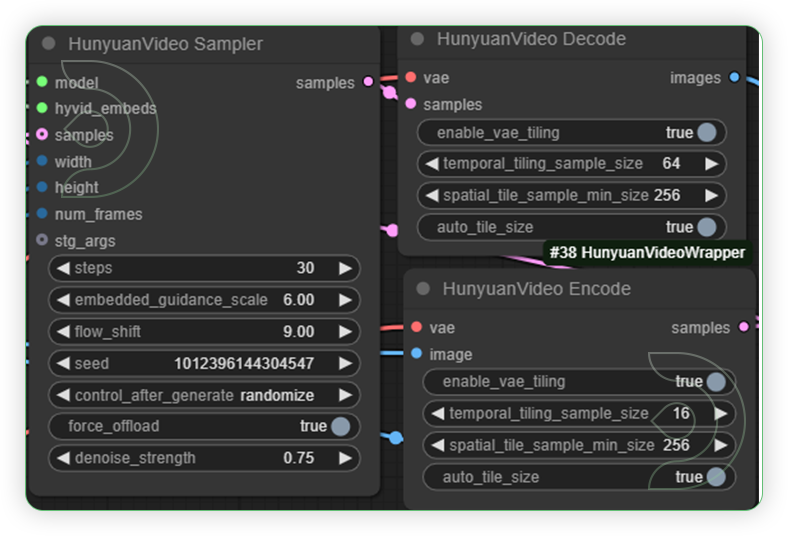

| 5. Set the Generation Settings Generation results may vary. You can tweak the denoise strength and the sweet spot around 0.60 to 0.75. |

|

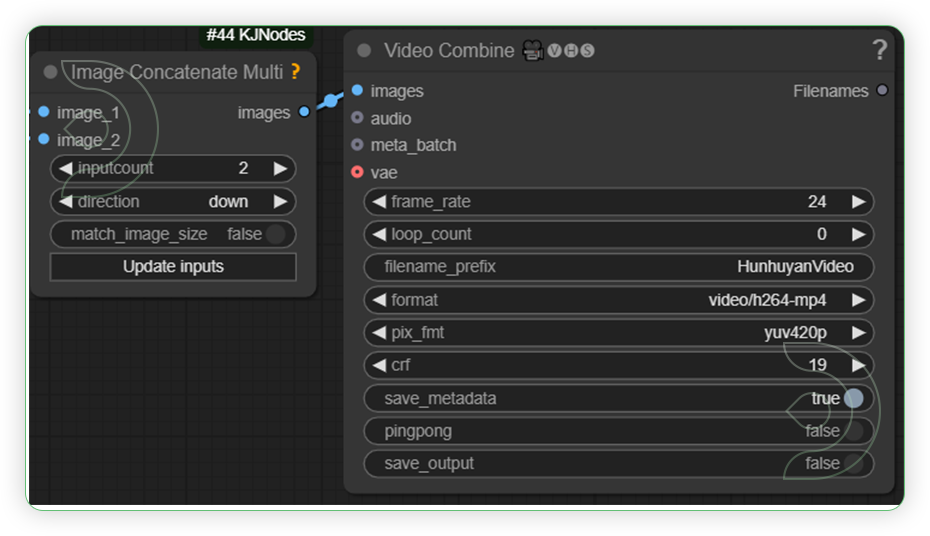

| 6. Check the Video Output |  |
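Step 2 is easier to reason about with the arithmetic spelled out. The sketch below shows what proportional scaling does to a clip that does not already match 16:9: fitting it inside 1280 x 720 while keeping its aspect ratio can leave one side well below the target, whereas disabling keep proportion simply outputs 1280 x 720. The `fit_keep_proportion` function is hypothetical, and the fit-inside rule is an assumption about how a keep-proportion option typically behaves, not the Image Resize node's actual code.

```python
def fit_keep_proportion(src_w: int, src_h: int, max_w: int, max_h: int) -> tuple[int, int]:
    """Scale (src_w, src_h) to fit inside (max_w, max_h) without changing the aspect ratio."""
    scale = min(max_w / src_w, max_h / src_h)
    return round(src_w * scale), round(src_h * scale)


if __name__ == "__main__":
    target = (1280, 720)
    # A 16:9 clip fills the target either way.
    print("1920x1080 kept proportional:", fit_keep_proportion(1920, 1080, *target))
    # A vertical phone clip shrinks to a narrow strip when proportions are kept.
    print("1080x1920 kept proportional:", fit_keep_proportion(1080, 1920, *target))
    # With keep proportion disabled, the output is always the exact target size.
    print("keep proportion disabled:", target)
```

The vertical clip comes out at 405 x 720 with proportions kept, which is why disabling keep proportion is the way to get a full 1280 x 720 frame.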

Examples

Chopper to Dragon

- Prompt - video of a flying red dragon flying across the sky, masterpiece, best quality, high quality

- Seed - 51574714768493

- Steps - 50

- Denoise - 0.60

- Frames - 101

Bird to Chicken

- Prompt - video of a (chicken:2) walking in the forest, masterpiece, best quality, high quality

- Seed - 721727012037475

- Steps - 40

- Denoise - 0.65

- Frames - 101

Man to Woman

- Prompt - video of a woman smiling, masterpiece, best quality, high quality

- Seed - 668267799746432

- Steps - 30

- Denoise - 0.60

- Frames - 101
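The three examples can also be kept as structured presets, which makes it easy to rerun one or to sweep the denoise strength across the 0.60 to 0.75 sweet spot from Step 5 while holding the seed fixed. This is a hypothetical sketch: the `Preset` class and `denoise_sweep` helper are not part of ComfyUI or the Hunyuan node, and the code only organizes the values listed above without calling ComfyUI.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Preset:
    """Generation settings copied from the examples above."""
    name: str
    prompt: str
    seed: int
    steps: int
    denoise: float
    frames: int


PRESETS = [
    Preset("Chopper to Dragon",
           "video of a flying red dragon flying across the sky, masterpiece, best quality, high quality",
           51574714768493, 50, 0.60, 101),
    Preset("Bird to Chicken",
           "video of a (chicken:2) walking in the forest, masterpiece, best quality, high quality",
           721727012037475, 40, 0.65, 101),
    Preset("Man to Woman",
           "video of a woman smiling, masterpiece, best quality, high quality",
           668267799746432, 30, 0.60, 101),
]

SWEET_SPOT = (0.60, 0.75)  # denoise range suggested in Step 5


def denoise_sweep(preset: Preset, low: float = SWEET_SPOT[0], high: float = SWEET_SPOT[1],
                  step: float = 0.05) -> list[Preset]:
    """Return copies of `preset` with denoise from low to high (inclusive), keeping the same seed."""
    count = int(round((high - low) / step)) + 1
    # Round each value so floating-point drift does not produce e.g. 0.7000000000000001.
    return [replace(preset, denoise=round(low + i * step, 2)) for i in range(count)]


if __name__ == "__main__":
    for variant in denoise_sweep(PRESETS[0]):
        print(variant.name, variant.seed, variant.denoise)
```

Keeping the seed fixed while only denoise changes makes it easier to see how much each step toward 0.75 pulls the result away from the source clip.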

If you’re having issues with installation or slow hardware, you can try any of these workflows on a more powerful GPU in your browser with ThinkDiffusion.

If you enjoy ComfyUI and you want to test out creating awesome animations, then feel free to check out this AnimateDiff tutorial here. And have fun out there with your noodles!
