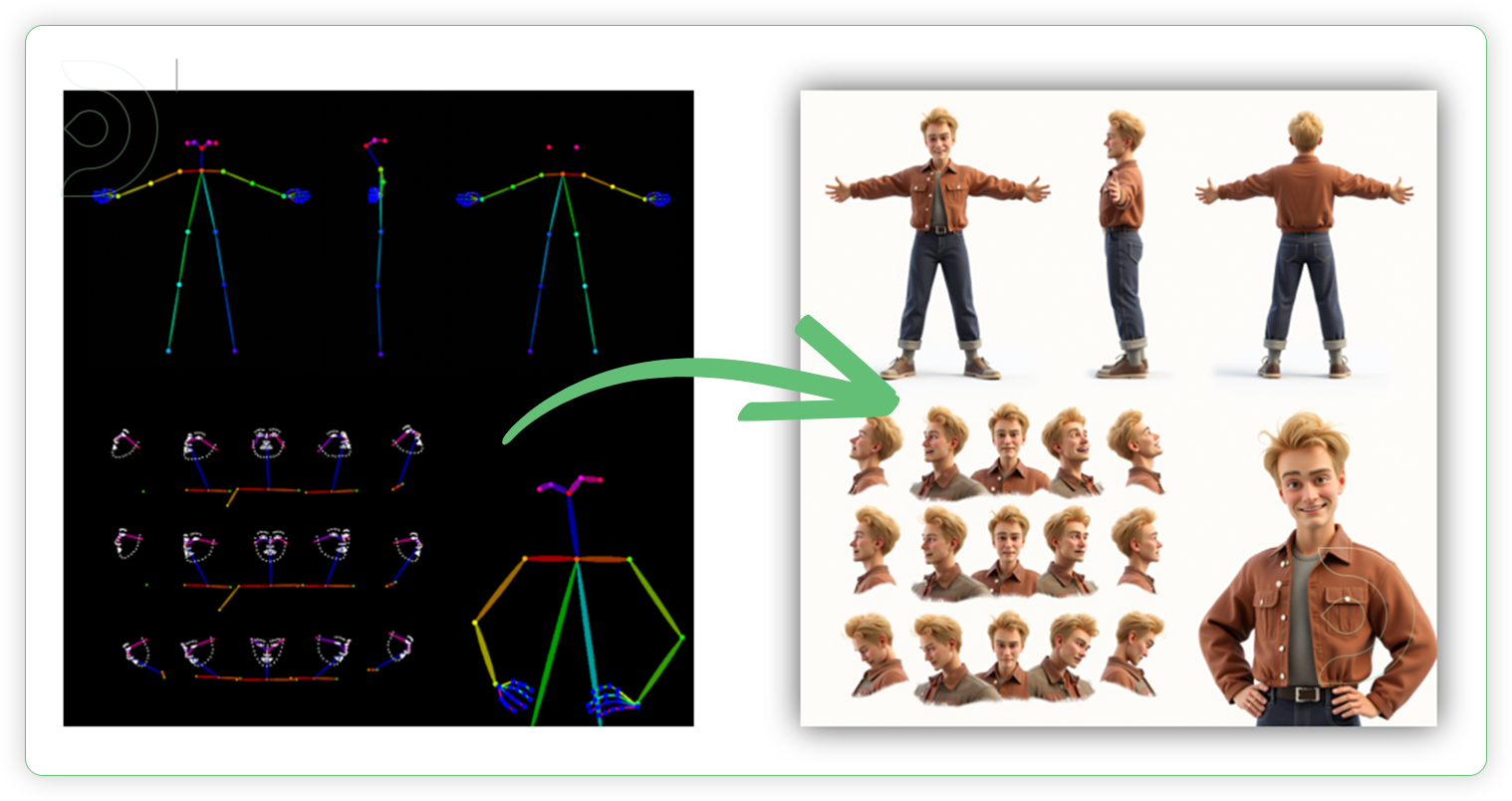

Ever find yourself struggling to keep your characters looking the same across different projects? You're not alone! Creating characters that look the same every time is crucial for a cohesive story.

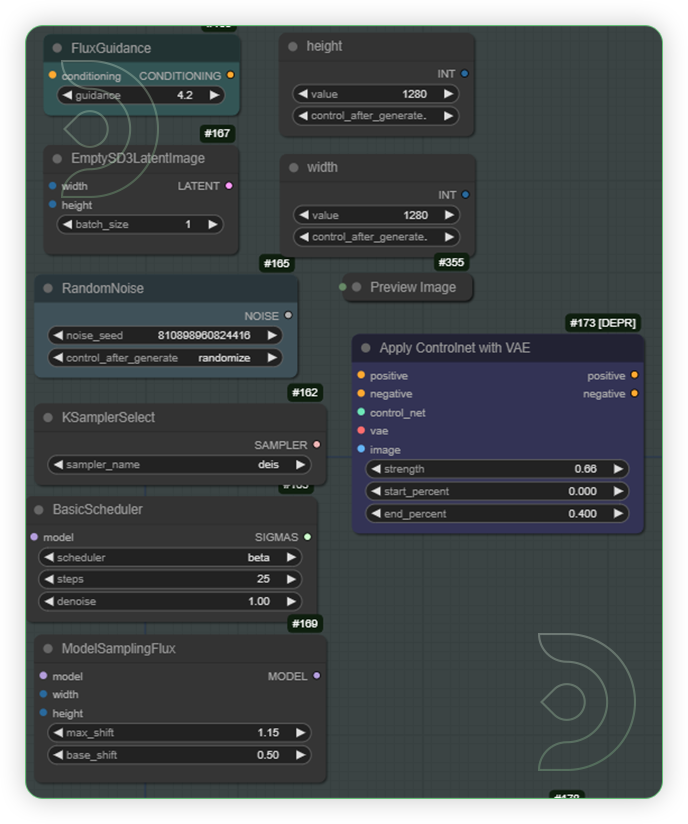

This guide will show you how to maintain consistency by using a workflow in ComfyUI. You can save time and focus more on the creative aspects.

Let's explore how this workflow can bring your character ideas to life with consistency and flair. We'd like to credit Mickmumpitz for his outstanding work in developing this workflow.

How to Use

One-Time Setup

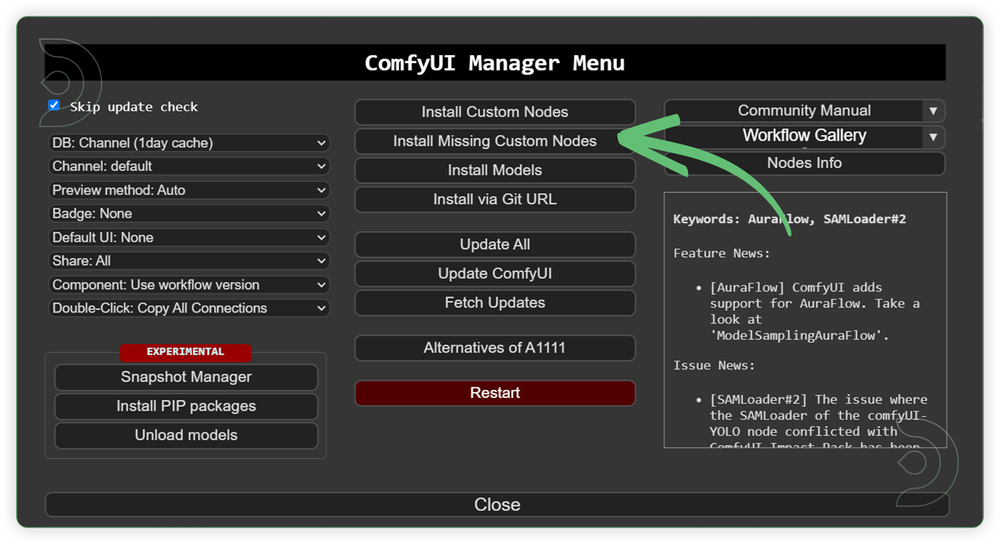

Custom Node

If the workflow shows red nodes, it is missing required custom nodes. Install them so that the workflow can run.

- Go to ComfyUI Manager > Click Install Missing Custom Nodes

- If the list shows custom nodes that still need to be installed, click Install for each one.

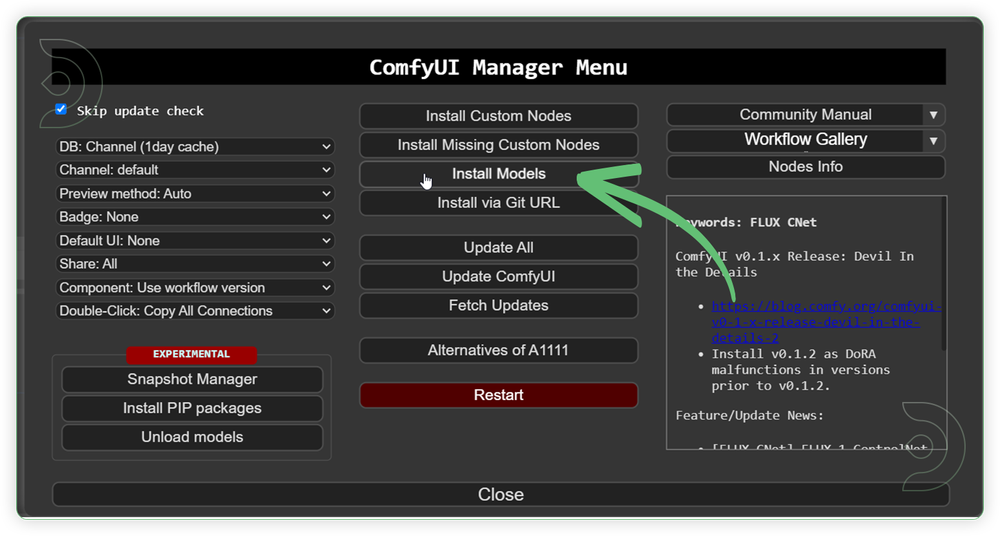
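If you want to see which node types a workflow expects before opening it, the check can be sketched in Python. This is a minimal sketch, not how ComfyUI Manager itself works: it assumes the workflow was saved in the UI export format (a top-level `nodes` list whose entries carry a `type` field), and the example workflow and the `installed_types` set are hypothetical data made up for illustration.

```python
import json


def load_workflow(path: str) -> dict:
    """Read a workflow JSON file saved from the ComfyUI interface."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def node_types_in_workflow(workflow: dict) -> set[str]:
    """Collect the node type names used in a UI-format workflow export."""
    return {node["type"] for node in workflow.get("nodes", []) if "type" in node}


def missing_node_types(workflow: dict, installed: set[str]) -> list[str]:
    """Return the node types the workflow uses that are not in `installed`."""
    return sorted(node_types_in_workflow(workflow) - installed)


# Hypothetical example data, not taken from the real workflow file.
example_workflow = {
    "nodes": [
        {"id": 1, "type": "LoadImage"},
        {"id": 2, "type": "KSampler"},
        {"id": 3, "type": "FaceDetailer"},
    ]
}
installed_types = {"LoadImage", "KSampler"}

print(missing_node_types(example_workflow, installed_types))
```

Any type this reports would show up as a red node in the interface, which is the cue to run Install Missing Custom Nodes.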

Models

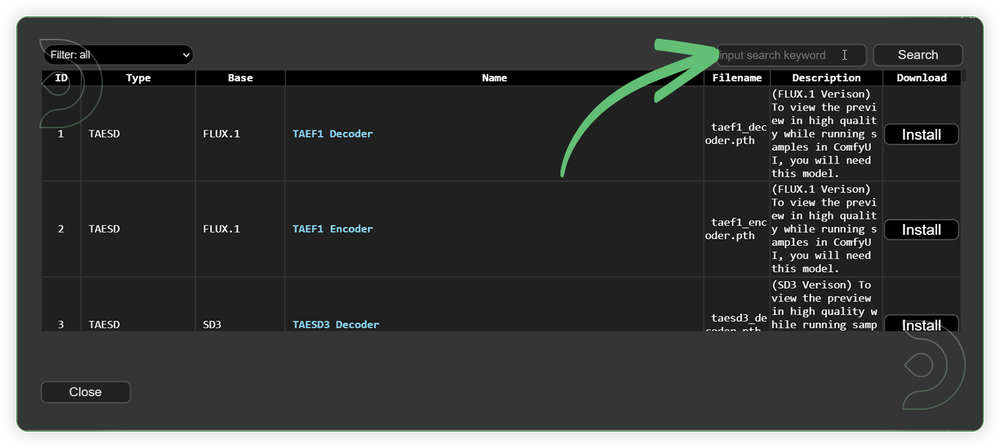

Download the recommended models (see the list below) from the Install Models dialog in ComfyUI Manager. Refresh or restart the machine after the files have downloaded.

1. Go to ComfyUI Manager > Click Install Models

2. When you find the exact model that you're looking for, click Install, then press Refresh when the download has finished.

Model Path Source

Use the model path source if you prefer to install a model from its link address: paste the address into ThinkDiffusion MyFiles using the upload-by-URL method.

| Model Name | Model Link Address |
|---|---|
| flux1-dev-fp8.safetensors | |
| t5xxl_fp8_e4m3fn.safetensors | |
| clip_l.safetensors | |
| ae.sft or ae.safetensors | |
| flux_shakker_labs_union_pro-fp8_e4m3fn.safetensors | |
| 4x-ClearRealityV1.pth | |
| face_yolov8m.pt | |
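After uploading, it can help to confirm that every file landed where ComfyUI will look for it. The sketch below checks a models directory for the files named in the table. The folder names are assumptions based on a common ComfyUI layout, not something this guide specifies, so adjust them to match your own installation (for example, the base model may belong in `checkpoints` or `diffusion_models` instead of `unet`).

```python
from pathlib import Path

# ASSUMED folder layout: (subfolder, accepted file names).
# The subfolder names are illustrative guesses; edit them for your setup.
ASSUMED_MODEL_FOLDERS = [
    ("unet", ("flux1-dev-fp8.safetensors",)),
    ("clip", ("t5xxl_fp8_e4m3fn.safetensors",)),
    ("clip", ("clip_l.safetensors",)),
    ("vae", ("ae.sft", "ae.safetensors")),  # either file name is acceptable
    ("controlnet", ("flux_shakker_labs_union_pro-fp8_e4m3fn.safetensors",)),
    ("upscale_models", ("4x-ClearRealityV1.pth",)),
    ("ultralytics/bbox", ("face_yolov8m.pt",)),
]


def find_missing_models(models_root, expected=ASSUMED_MODEL_FOLDERS):
    """Return a label for each expected model with no matching file on disk."""
    root = Path(models_root)
    missing = []
    for folder, names in expected:
        # An entry is satisfied when any of its accepted names exists.
        if not any((root / folder / name).is_file() for name in names):
            missing.append(" or ".join(names))
    return missing


if __name__ == "__main__":
    # "models" is a placeholder path; point it at your own models directory.
    for label in find_missing_models("models"):
        print("missing:", label)
```

An empty result means every file in the table was found under the assumed folders. A reported file may still be present under a different folder name, so check the folder mapping before downloading it again.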


Guide Table for Upload

Procedures

| Steps | Default Node |

|---|---|

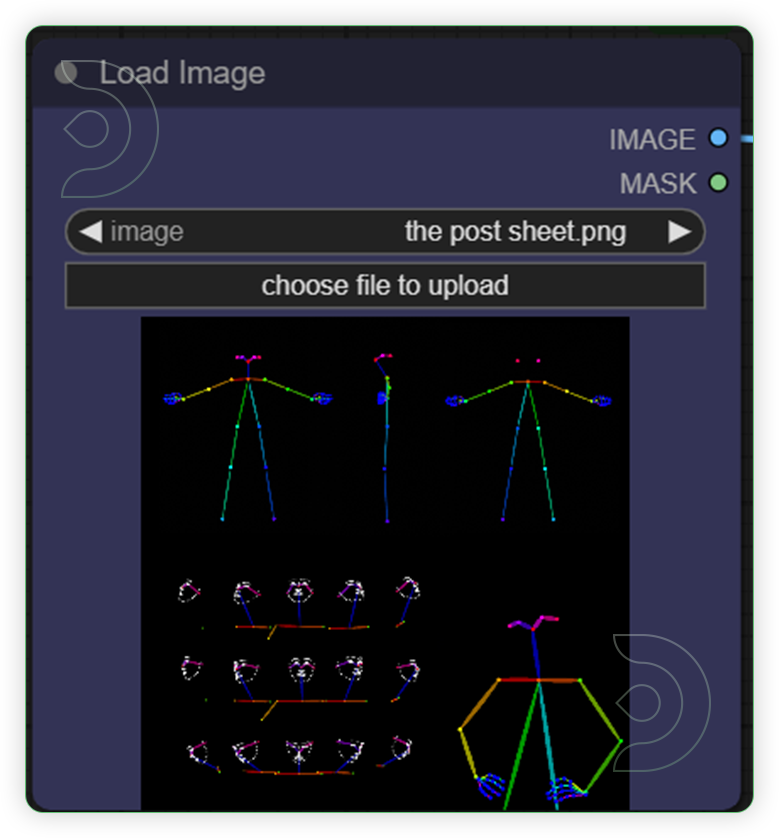

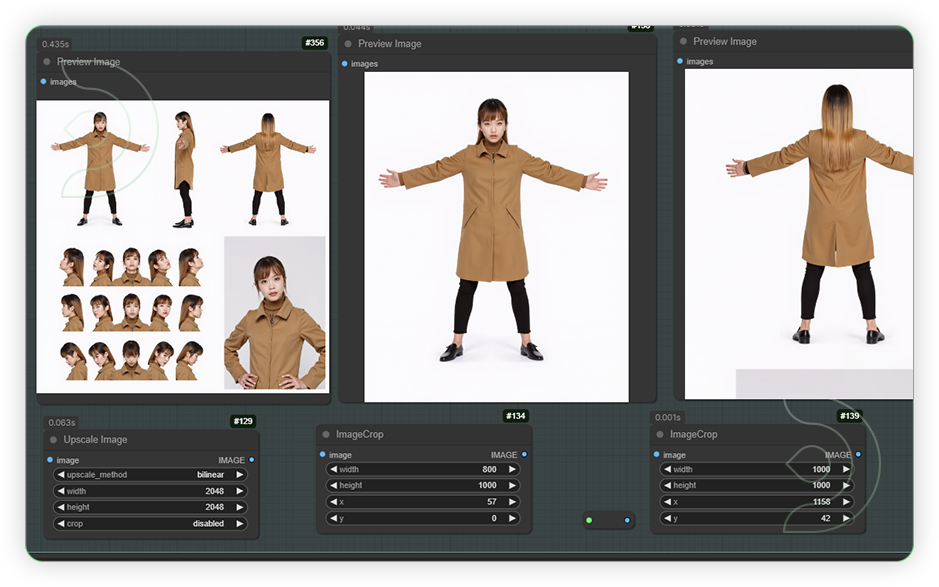

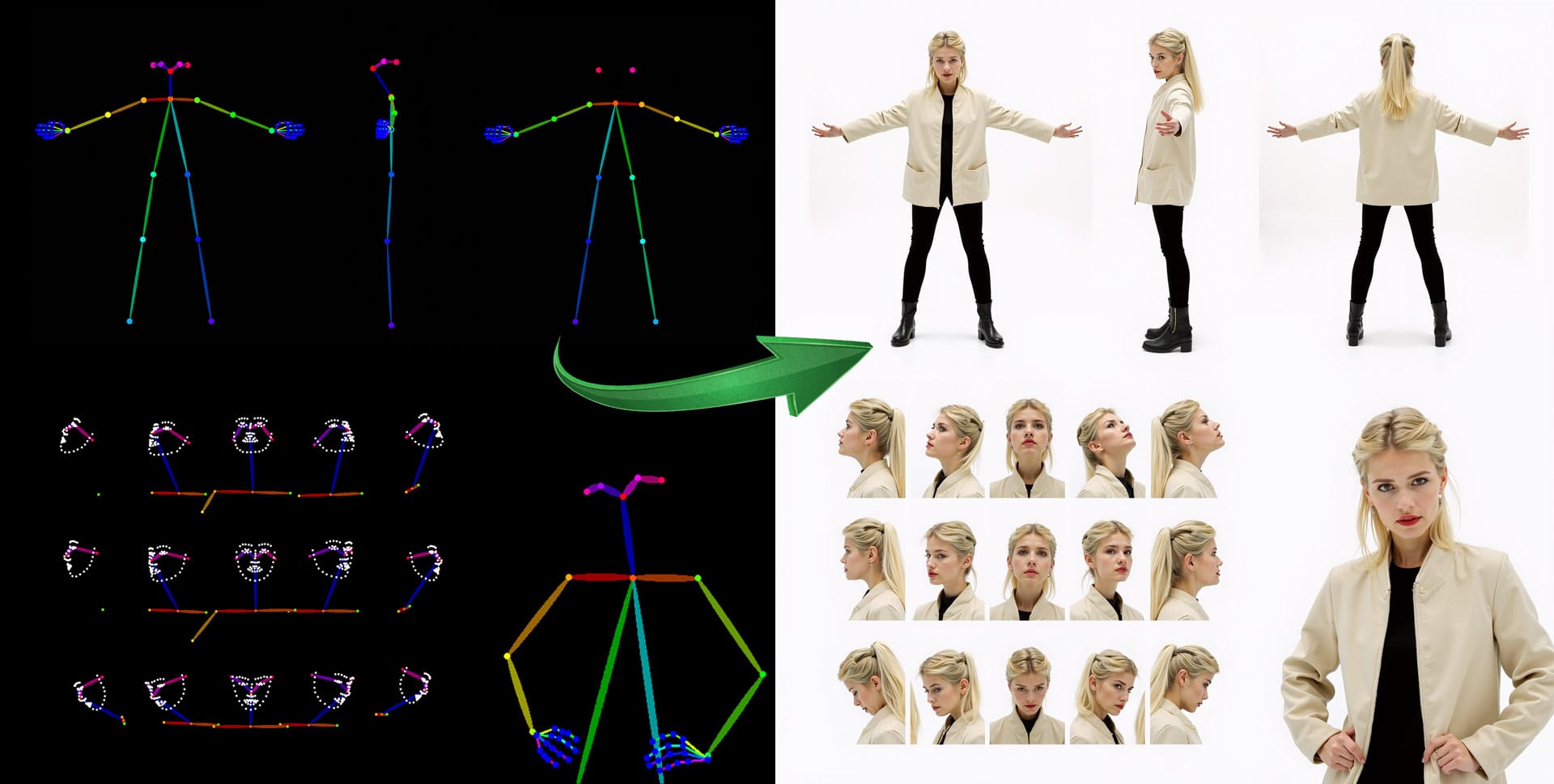

| Load the pose sheet image (find the pose sheet image above next to the workflow) |  |

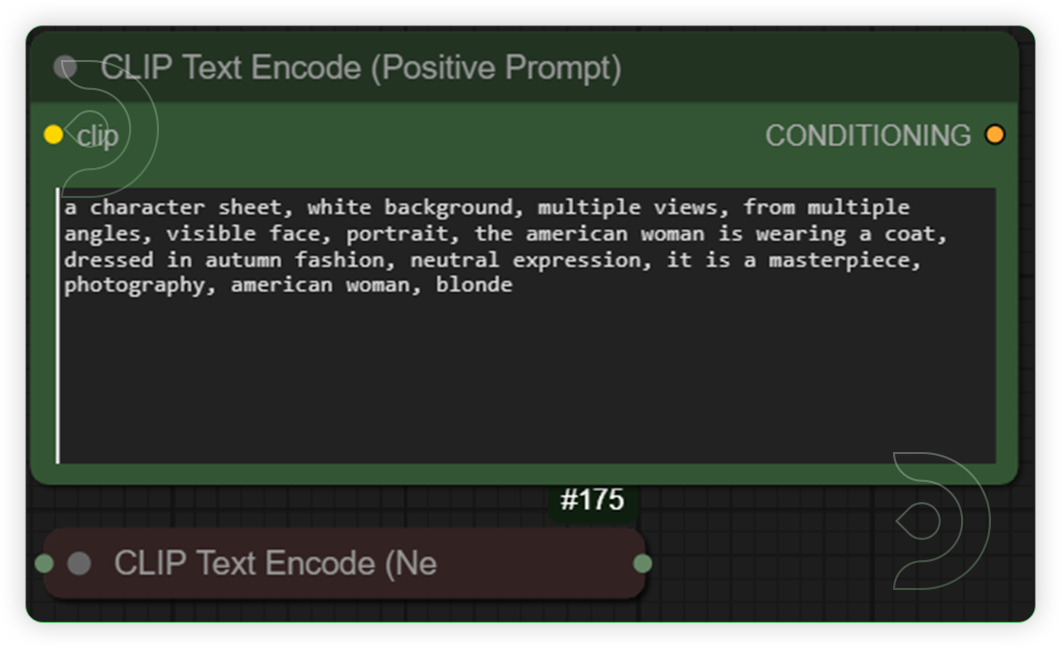

| Write a Prompt |  |

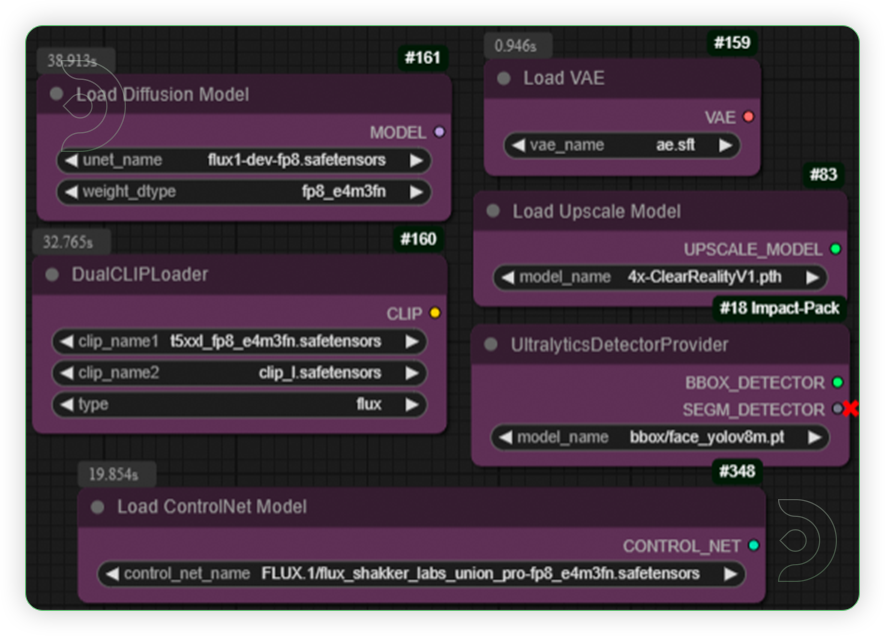

| Set the Models |  |

| Check the Generation Settings |  |

| Check the Default Settings for Image Enlargement and Face Repair |  |

| Check the Generated Image and its Poses |  |

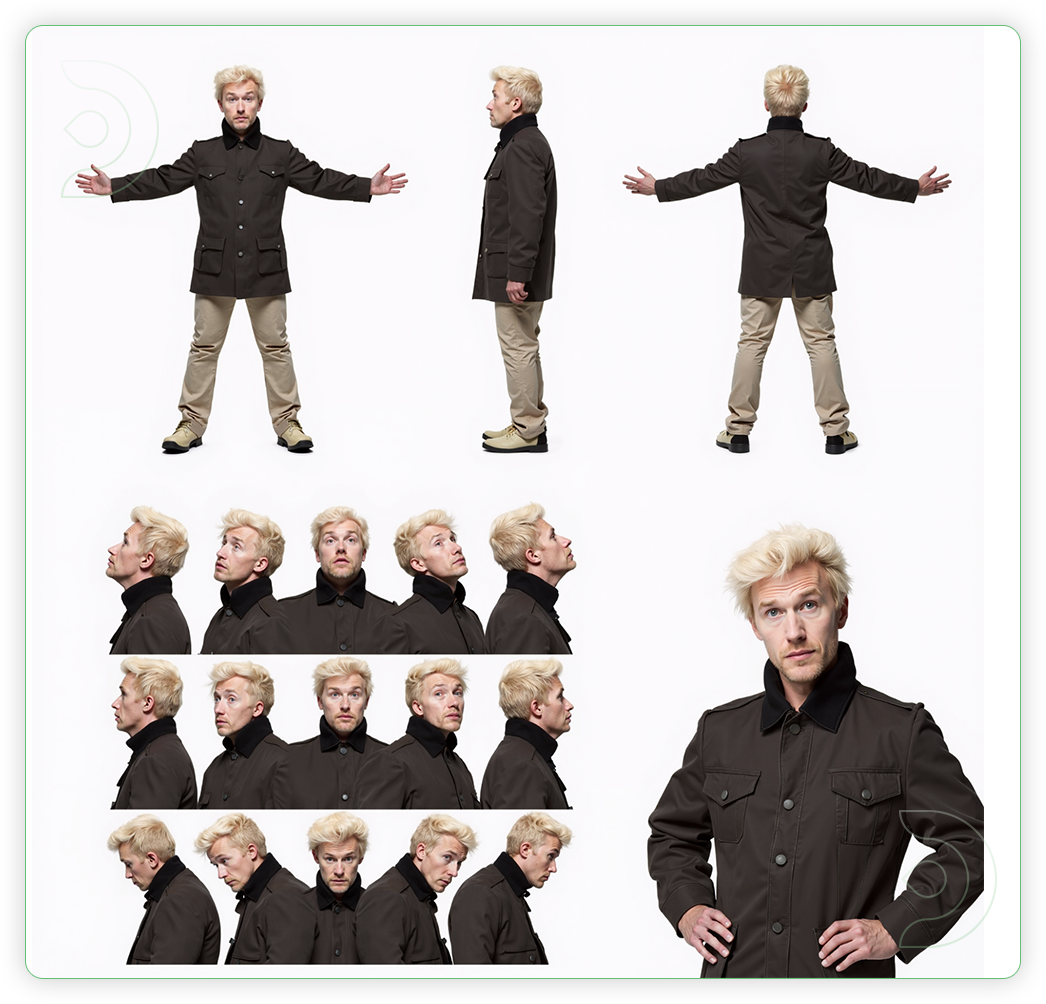

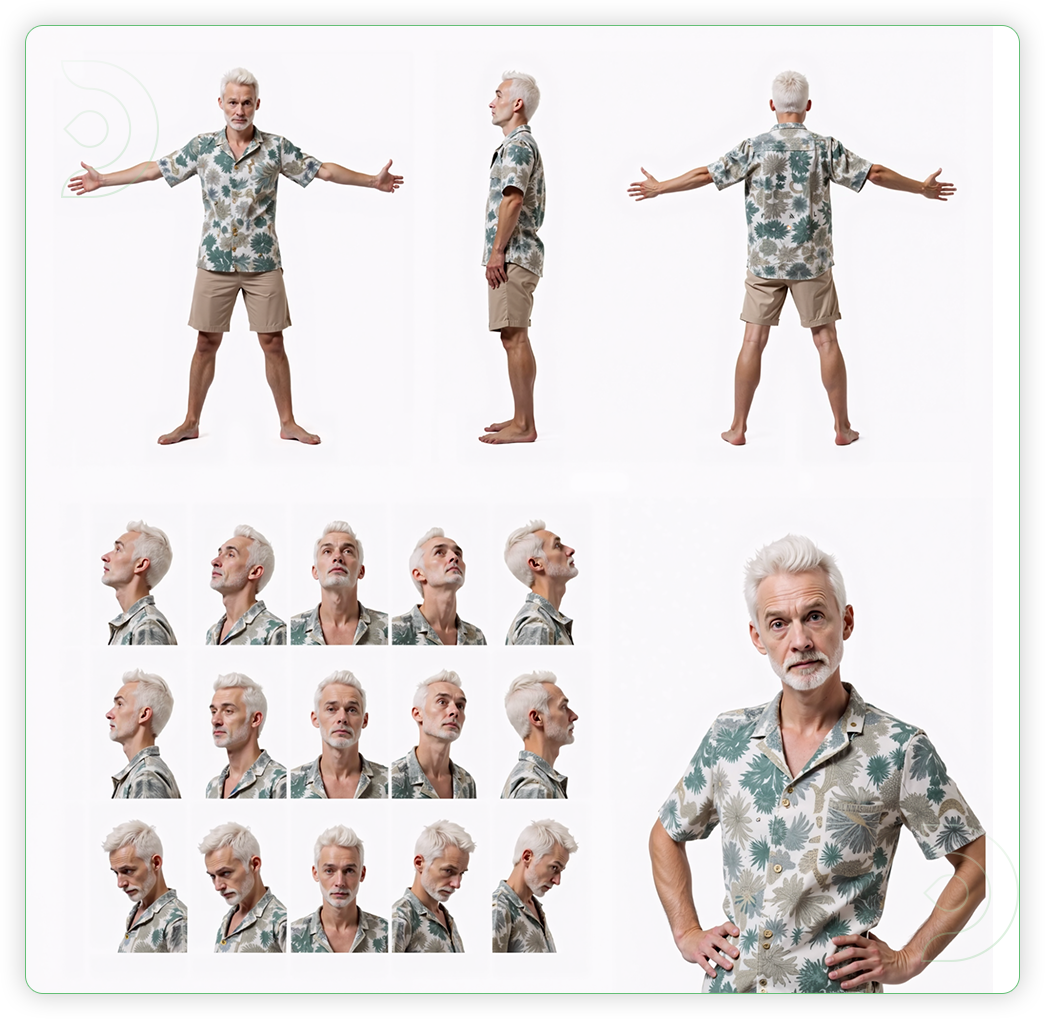

Examples

Prompt Settings

Image Source - Use the provided pose sheet file

Prompt - Write a prompt that describes the character you want to create

Base model - flux1-dev-fp8.safetensors

CLIP models - t5xxl_fp8_e4m3fn.safetensors, clip_l.safetensors

VAE model - ae.safetensors or ae.sft

ControlNet model - flux_shakker_labs_union_pro-fp8_e4m3fn.safetensors

Upscale model - 4x-ClearRealityV1.pth

Face model - face_yolov8m.pt

If you’re having issues with installation or slow hardware, you can try any of these workflows on a more powerful GPU in your browser with ThinkDiffusion.

If you enjoy ComfyUI and you want to test out creating awesome animations, then feel free to check out this AnimateDiff tutorial here. Happy creating!
