ComfyUI is a popular node-based tool that allows you to create stunning images and animations with Stable Diffusion. However, it is not for the faint-hearted and can be intimidating if you are new to it.

In this guide, we've collected 20 cool ComfyUI workflows that you can download and try out for yourself.

| Category | Description | Link |
|---|---|---|
| SDXL Default workflow | A great starting point for using txt2img with SDXL | View Now |
| Img2Img | A great starting point for using img2img with SDXL | View Now |
| Upscaling | How to upscale your images with ComfyUI | View Now |
| Merge 2 images together | Merge 2 images together with this ComfyUI workflow | View Now |
| ControlNet Depth ComfyUI workflow | Use ControlNet Depth to enhance your SDXL images | View Now |
| Animation workflow | A great starting point for using AnimateDiff | View Now |
| ControlNet workflow | A great starting point for using ControlNet | View Now |
| Inpainting workflow | A great starting point for using inpainting | View Now |
| Using LoRAs | A workflow to use LoRAs in your generations | View Now |
| Hidden Faces | A workflow to create hidden faces and text | View Now |
| Wan Image2Video | A workflow to turn still images into videos with Wan 2.1 | View Now |
| Image2Image Character Spin | A workflow to create a 3D rotation of the subject | View Now |
| Hunyuan Video2Video | A cutting-edge video model for video2video generation | View Now |
| Hunyuan 3D | A model for generating high-quality 3D assets | View Now |
| Consistent character with Flux and ComfyUI | A workflow to keep a character's appearance consistent across images | View Now |
| Dancing noodles ComfyUI Tutorial | A workflow to turn videos into stylized "dancing" animations | View Now |
| Outpainting in ComfyUI | A powerful workflow for extending images while preserving their artistic integrity | View Now |
| HyperSD and Blender | Create 3D assets in real time with HyperSD and Blender | View Now |
| Inpainting using Reference Image | A workflow that inpaints anything using a reference image | View Now |
| Sketch to Image | A workflow that turns a sketch into an image | View Now |

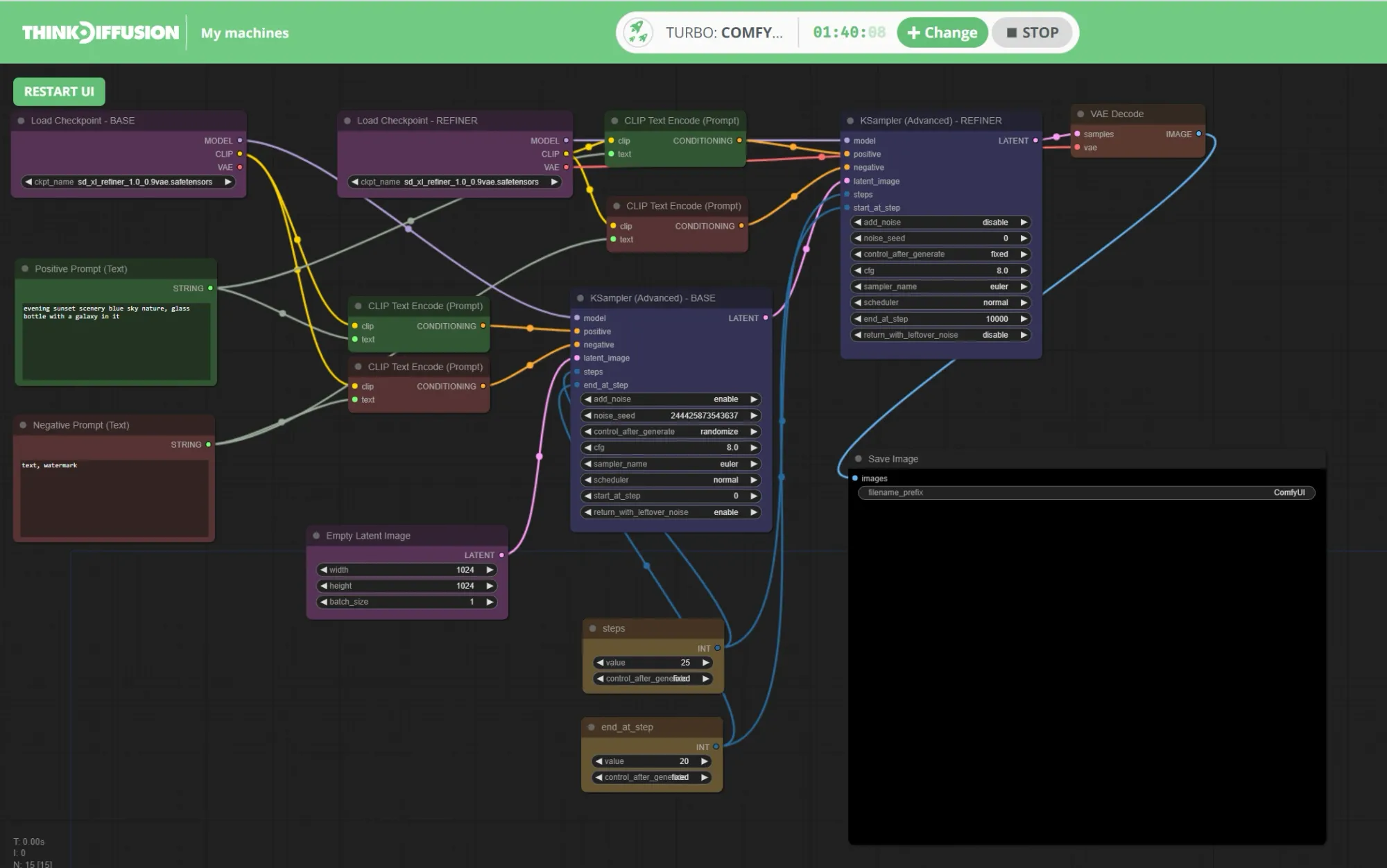

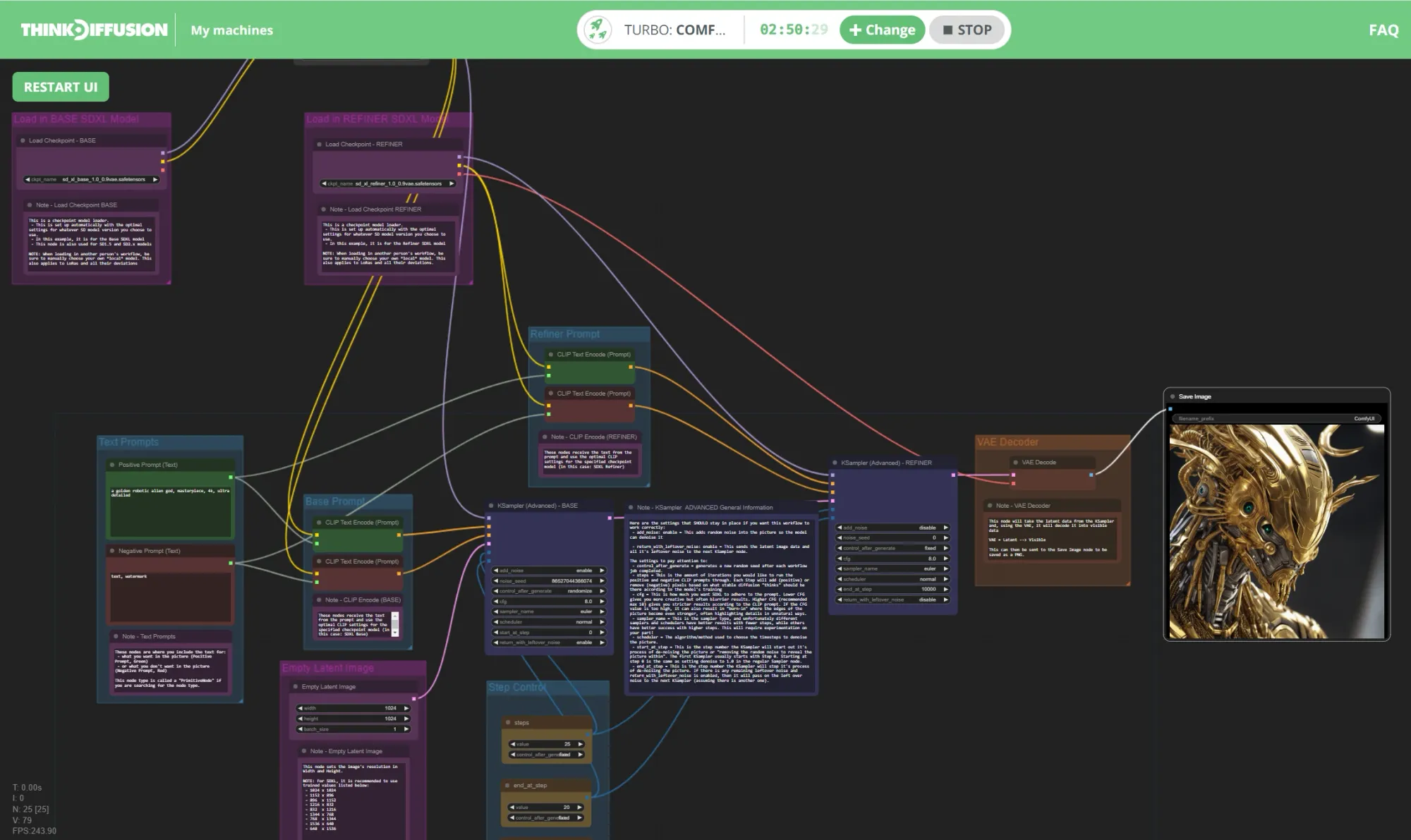

SDXL Default ComfyUI workflow

What it's great for:

This is a great starting point for generating SDXL images at 1024 x 1024 with txt2img, using the SDXL base model followed by the SDXL refiner.
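In a typical SDXL base + refiner setup, the base model runs the early, noisier steps and hands its partially denoised latent to the refiner for the last few; in ComfyUI this is usually done with two KSampler (Advanced) nodes using `start_at_step` and `end_at_step`. The sketch below computes those two step ranges. The 80/20 split is an assumption (a common choice, not necessarily what this workflow uses), and `split_steps` is a hypothetical helper.

```python
def split_steps(total_steps: int, base_fraction: float = 0.8) -> tuple[tuple[int, int], tuple[int, int]]:
    """Return (start, end) step ranges for the base model and the refiner.

    Hypothetical helper: the 0.8 default is an assumed split, not a
    value read from the downloadable workflow.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 < base_fraction <= 1.0:
        raise ValueError("base_fraction must be in (0, 1]")
    handoff = round(total_steps * base_fraction)
    base_range = (0, handoff)               # base: start_at_step=0, end_at_step=handoff
    refiner_range = (handoff, total_steps)  # refiner: picks up where the base stopped
    return base_range, refiner_range


base, refiner = split_steps(25)
print("base   :", base)     # (0, 20)
print("refiner:", refiner)  # (20, 25)
```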

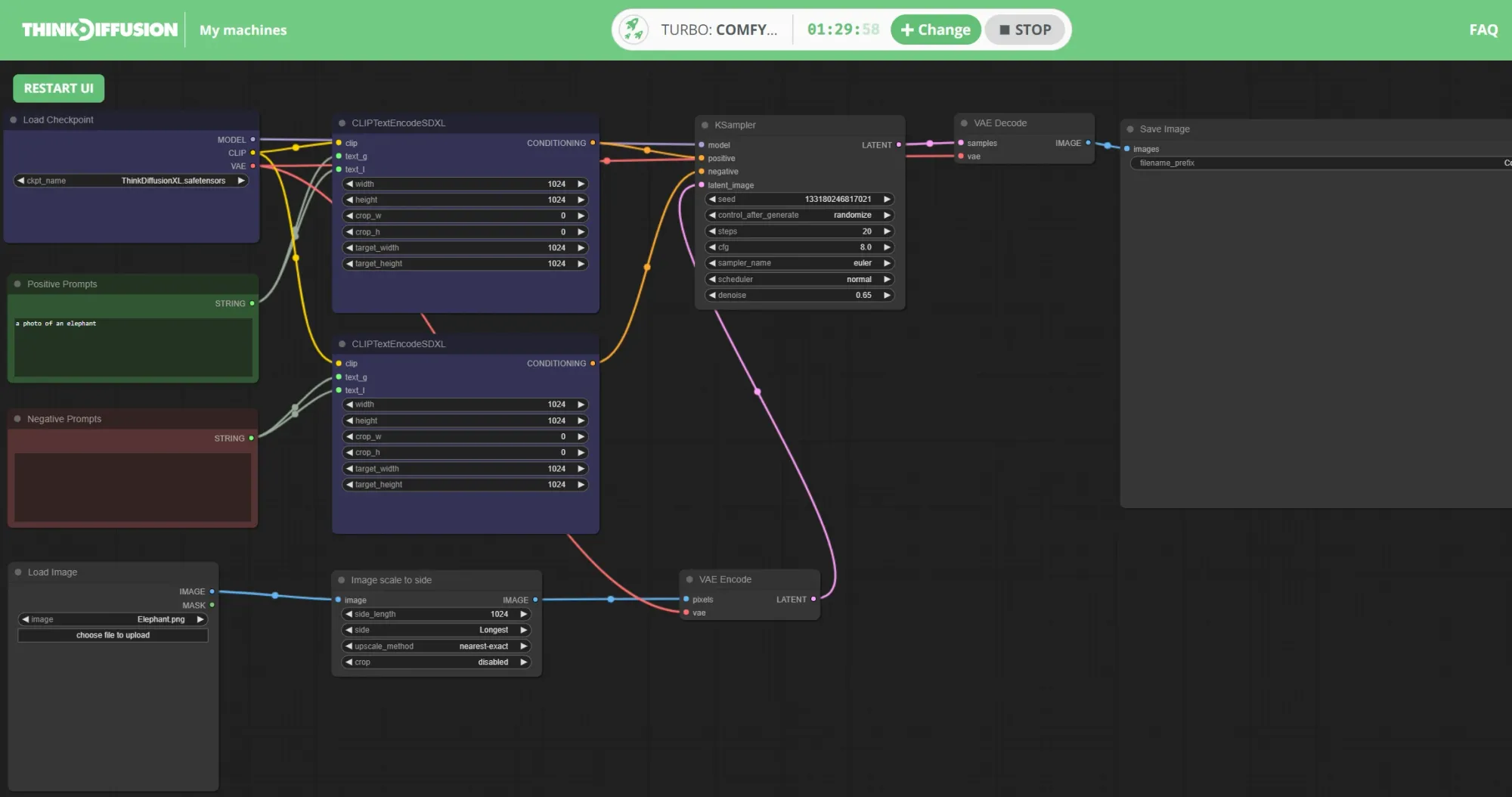

Img2Img ComfyUI workflow

What it's great for:

This is a great starting point for using Img2Img with ComfyUI. Upload any image you want, then experiment with the prompt and the denoising strength to change your original image: lower values stay closer to the original, while higher values change it more.
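Denoising strength controls how far into the noise schedule sampling starts. Here is a simplified model based on our reading of ComfyUI's KSampler (treat the exact rounding as an assumption): with `steps` steps and `denoise` below 1, it builds a longer schedule of `int(steps / denoise)` steps and runs only the last `steps` of them, skipping the noisiest part, so the original image is never fully replaced by noise. `denoise_window` is a hypothetical helper.

```python
def denoise_window(steps: int, denoise: float) -> tuple[int, int]:
    """Return (schedule_length, first_step_run) for an img2img pass.

    Simplified model (assumption): sampling skips the noisiest part of a
    longer schedule and runs only its final `steps` steps.
    """
    if steps <= 0:
        raise ValueError("steps must be positive")
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    if denoise >= 0.9999:
        return steps, 0  # full denoise: behaves like txt2img
    schedule_length = int(steps / denoise)
    first_step_run = schedule_length - steps  # the noisier steps before this are skipped
    return schedule_length, first_step_run


for strength in (1.0, 0.75, 0.5, 0.25):
    length, start = denoise_window(20, strength)
    print(f"denoise={strength}: schedule of {length} steps, starting at step {start}")
```

The lower the strength, the larger the skipped fraction of the schedule, which is why low values keep more of the original image.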

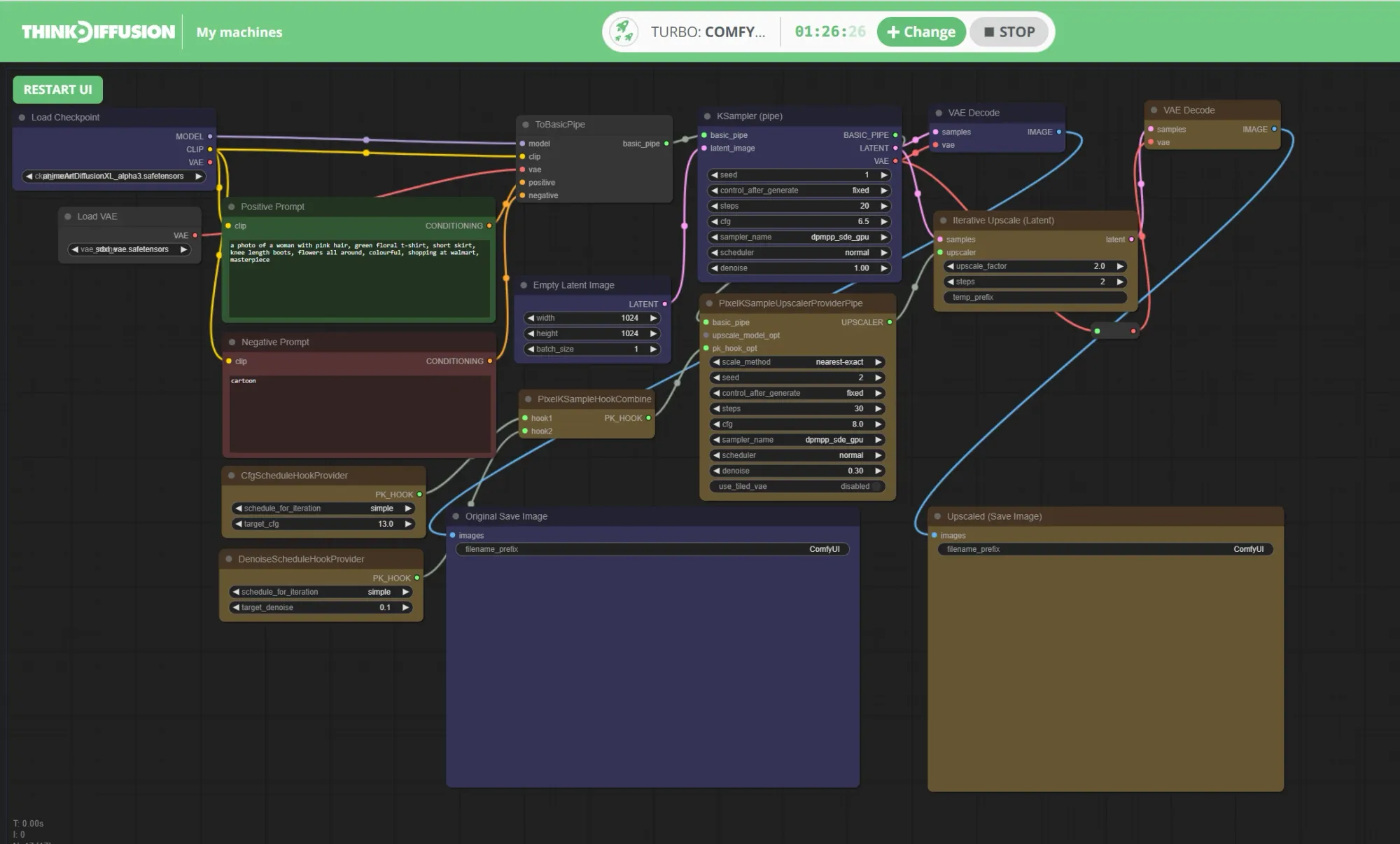

Upscaling ComfyUI workflow

What it's great for:

If you want to upscale your images with ComfyUI, look no further! The image above shows a 2x upscale that enhances the quality of the original.
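If an upscaled image is fed back into Stable Diffusion (for example, for a second sampling pass), its width and height should be multiples of 8, because the VAE works on latents at 1/8 of the pixel resolution. The sketch below picks a target size for a given scale factor under that assumption; `upscaled_size` is a hypothetical helper, and the workflow's own upscale nodes may size images differently.

```python
def upscaled_size(width: int, height: int, factor: float = 2.0, multiple: int = 8) -> tuple[int, int]:
    """Scale (width, height) by `factor`, rounding each side to a multiple
    of `multiple` (8 matches Stable Diffusion's 8x VAE downsampling)."""
    if width <= 0 or height <= 0 or factor <= 0:
        raise ValueError("width, height and factor must be positive")

    def snap(value: float) -> int:
        # Round to a nearby multiple, but never below one multiple.
        return max(multiple, int(round(value / multiple)) * multiple)

    return snap(width * factor), snap(height * factor)


print(upscaled_size(1024, 1024))      # (2048, 2048)
print(upscaled_size(832, 1216, 1.5))  # (1248, 1824)
```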

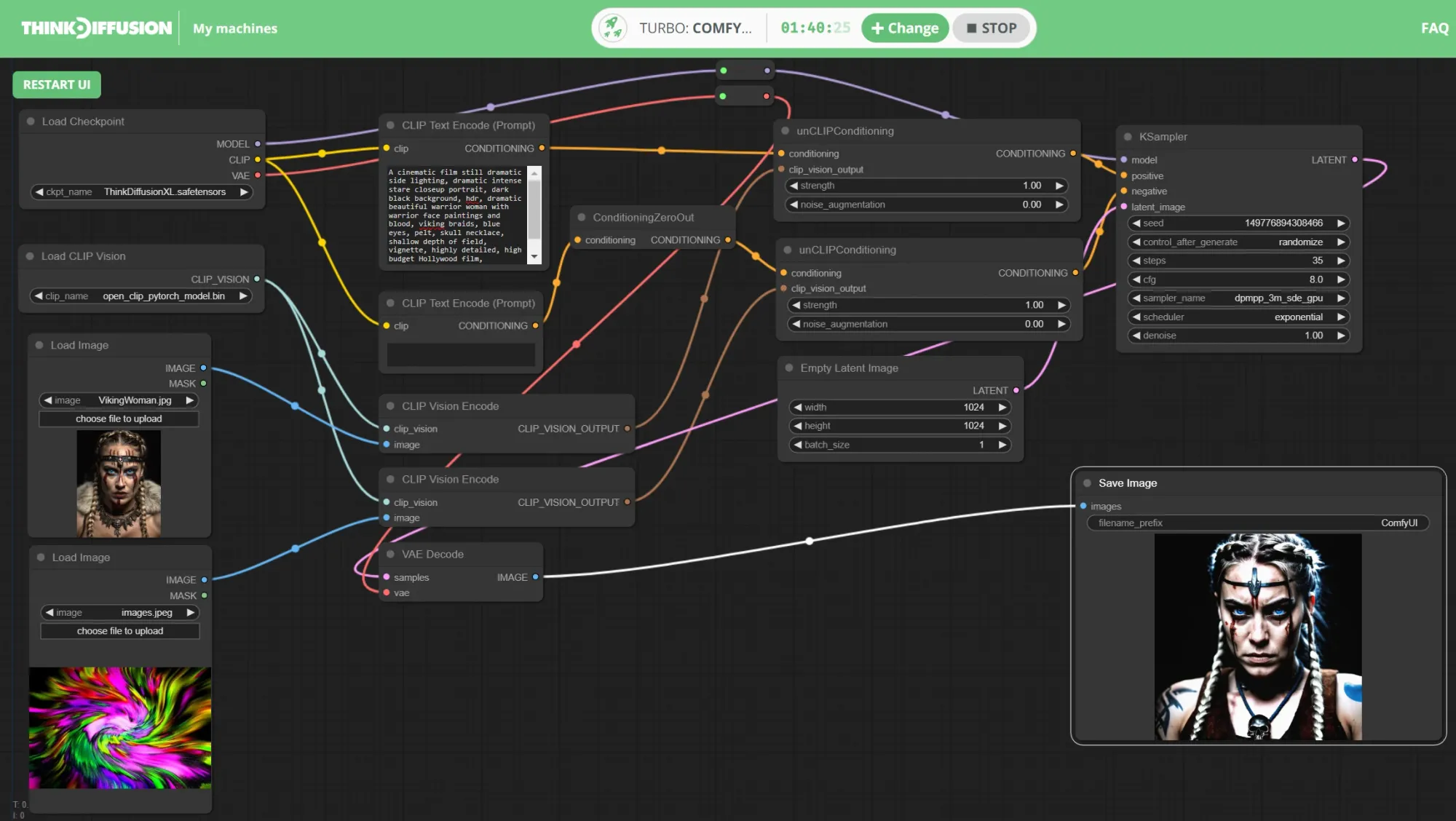

Merging 2 Images together

What it's great for:

Merge 2 images together with this ComfyUI workflow. Simply download the .json file, change your input images and prompts, and you are good to go!

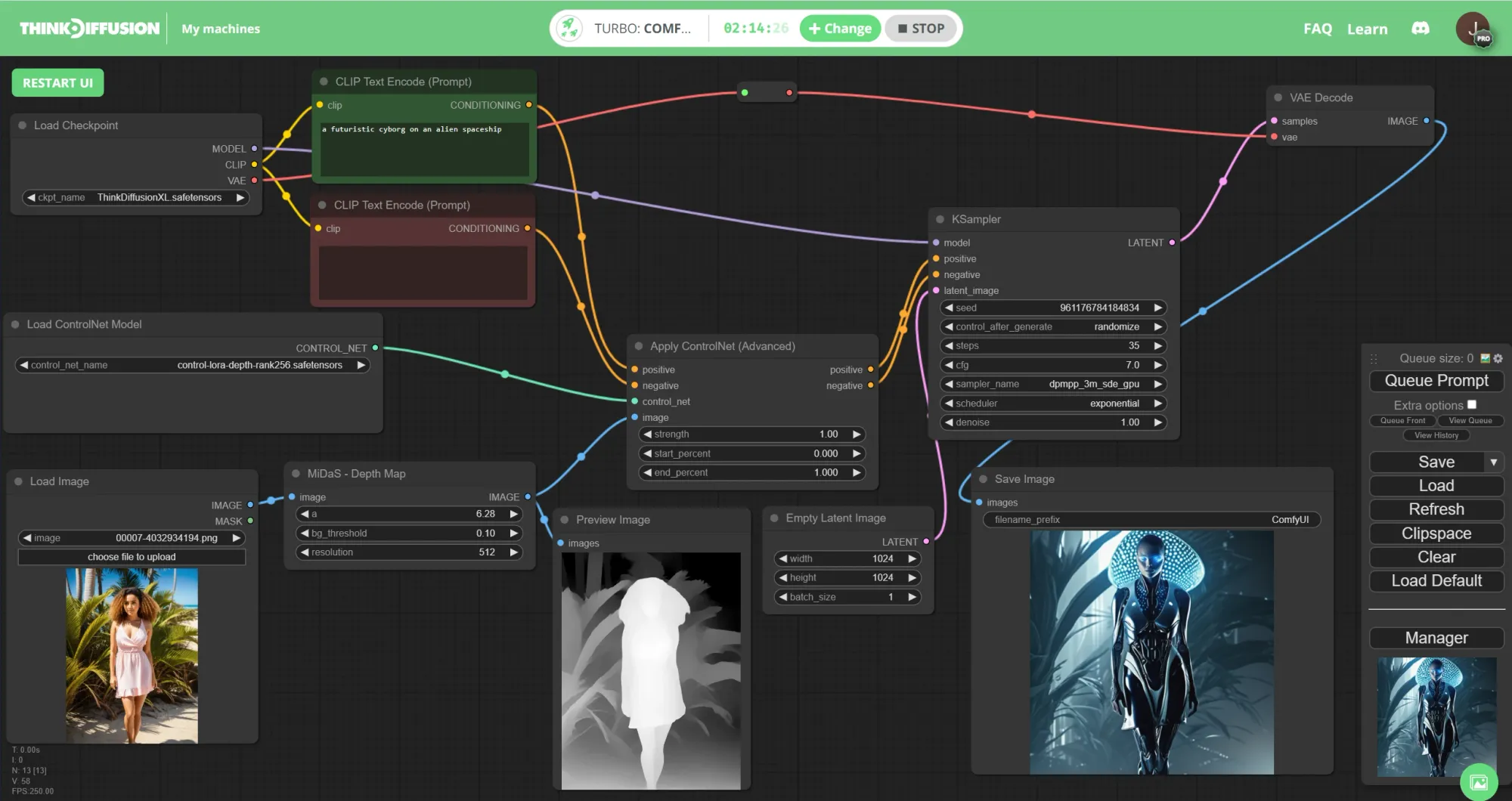

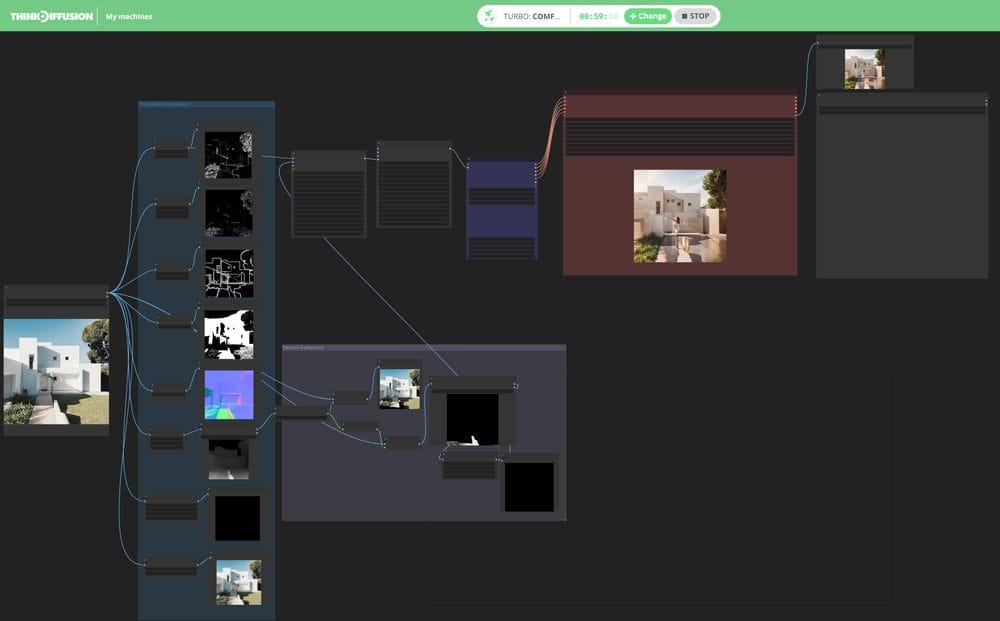

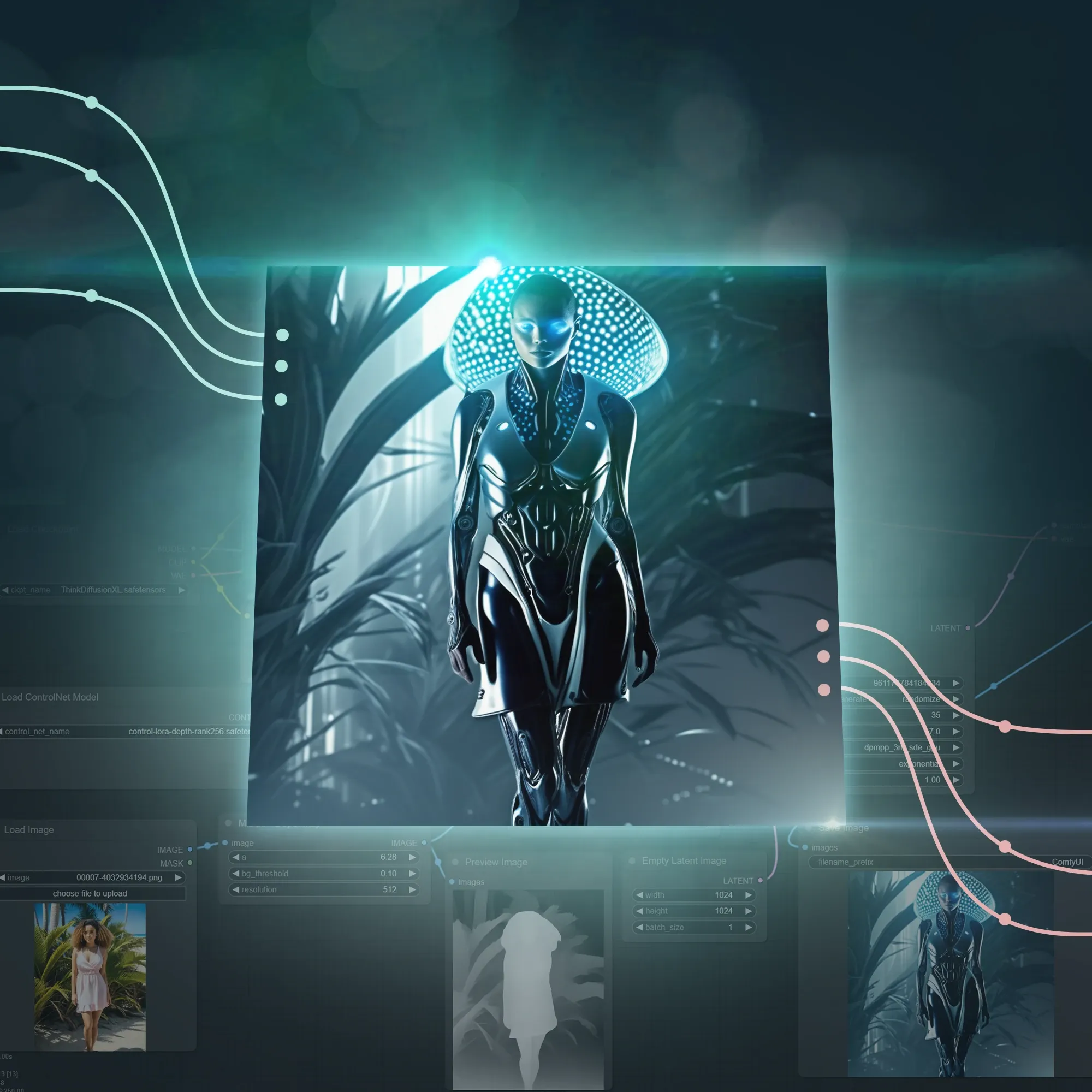

ControlNet Depth ComfyUI workflow

What it's great for:

ControlNet Depth takes an existing image and runs a preprocessor to generate a depth map of it, capturing the overall shape and layout of the scene. We can then use new prompts to generate a completely new image that keeps that spatial layout.
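Depth preprocessors such as MiDaS output a raw relative-depth array, which is then rescaled into an 8-bit grayscale image for ControlNet. With MiDaS-style output, larger values mean closer to the camera, so near objects appear bright. The sketch below shows only that normalization step on a plain nested list; the raw values are made up for illustration, and the preprocessor node in the workflow may use a different model or scaling.

```python
def depth_to_grayscale(depth: list[list[float]]) -> list[list[int]]:
    """Min-max normalize a 2D relative-depth map to 0-255 integers.

    Assumes MiDaS-style values where larger means closer, so the
    nearest point maps to 255 (white) and the farthest to 0 (black).
    """
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0 for _ in row] for row in depth]  # flat scene: no depth contrast
    scale = 255.0 / (hi - lo)
    return [[round((v - lo) * scale) for v in row] for row in depth]


# Made-up 3x3 relative-depth values: a near object in the centre.
raw = [
    [1.0, 1.0, 1.0],
    [1.0, 9.0, 1.0],
    [1.0, 3.0, 1.0],
]
for row in depth_to_grayscale(raw):
    print(row)
```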

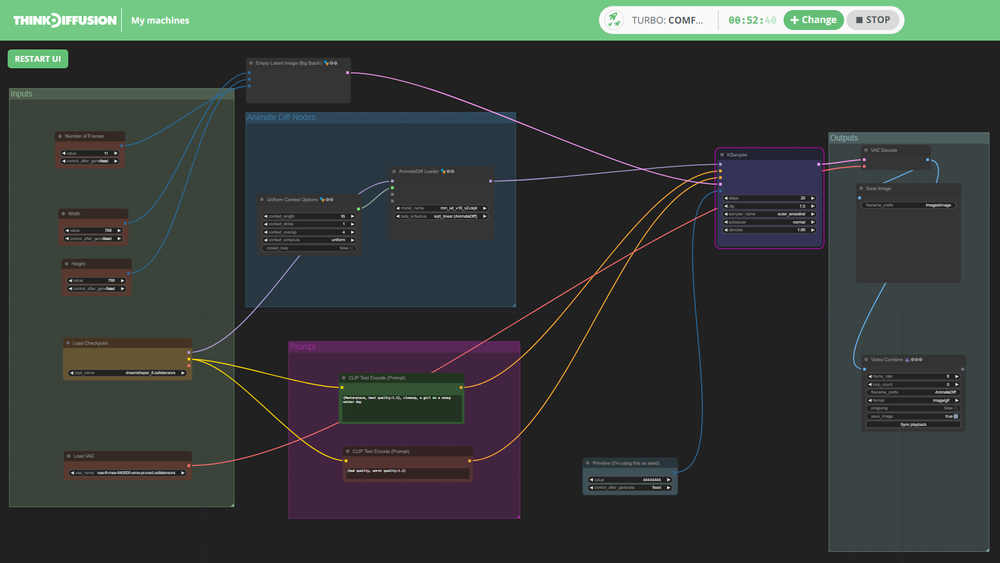

Create animations with AnimateDiff

What it's great for:

AnimateDiff is an immensely powerful way to create animations with Stable Diffusion in ComfyUI. Check out these workflows to achieve great-looking animations with ease!
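AnimateDiff motion modules are trained on short clips (16 frames for the common SD1.5 modules), so longer animations are usually generated in overlapping "context windows" that are blended together. The sketch below only computes uniform sliding windows over the frame indices. The `context_length=16` and `overlap=4` defaults are assumptions, `context_windows` is a hypothetical helper, and the context scheduling in ComfyUI's AnimateDiff nodes is more sophisticated than this.

```python
def context_windows(num_frames: int, context_length: int = 16, overlap: int = 4) -> list[list[int]]:
    """Split frame indices 0..num_frames-1 into overlapping windows.

    Simplified uniform scheduler (assumption): each window starts
    `context_length - overlap` frames after the previous one, and the
    last window is shifted back so it ends exactly on the final frame.
    """
    if context_length <= 0 or not 0 <= overlap < context_length:
        raise ValueError("need context_length > 0 and 0 <= overlap < context_length")
    if num_frames <= context_length:
        return [list(range(num_frames))]  # short clip: one window covers everything
    stride = context_length - overlap
    starts = list(range(0, num_frames - context_length + 1, stride))
    if starts[-1] + context_length < num_frames:
        starts.append(num_frames - context_length)  # cover the tail frames
    return [list(range(s, s + context_length)) for s in starts]


for window in context_windows(32):
    print(window[0], "->", window[-1])
```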

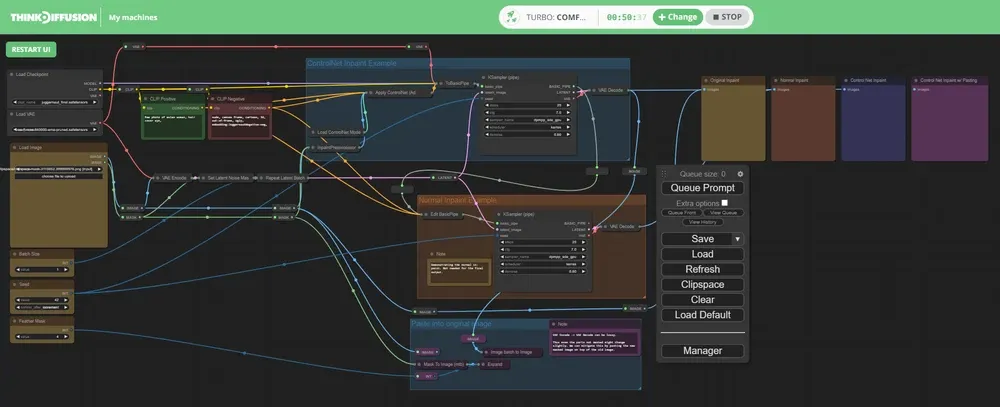

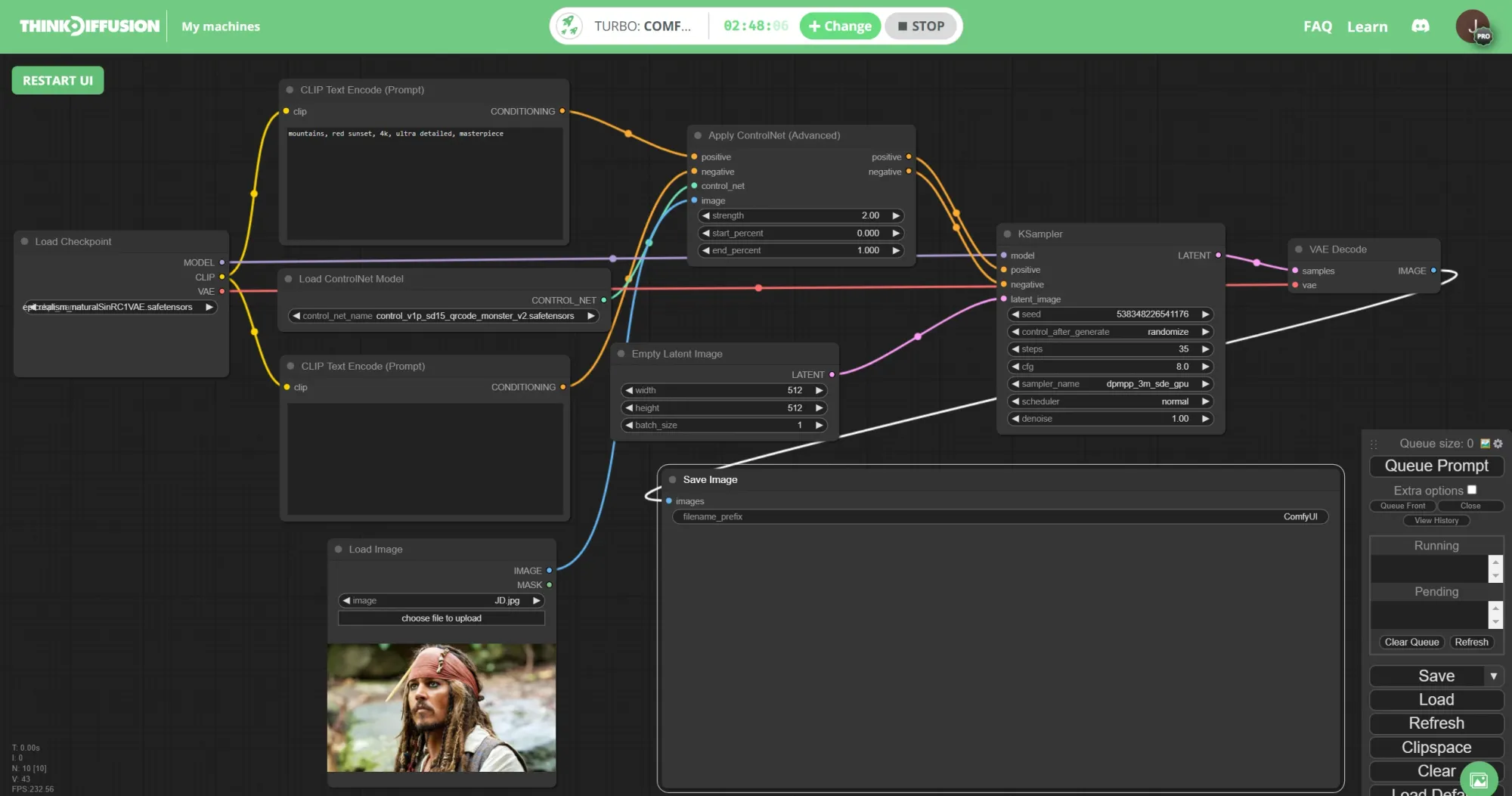

ControlNet Workflow

What it's great for:

ControlNet is probably the most popular add-on for Stable Diffusion, and this workflow will get you started creating fantastic art with the full control you've long searched for.

Select an image in the leftmost node, then choose the preprocessor and ControlNet model you want in the Multi-ControlNet Stack node at the top.

Inpainting workflow

What it's great for:

Once you've created the artwork you're looking for, you can go further with inpainting, which lets you customize an existing image. Paint a mask over the parts you want to change, and the workflow regenerates those areas to suit your desired result!
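Conceptually, the final step of an inpainting pass is a masked composite: pixels inside the painted mask come from the newly generated image, and everything outside it is kept from the original (ComfyUI has nodes such as ImageCompositeMasked for this). The sketch below shows that blend on single-channel nested lists; `composite` is a hypothetical helper, and a soft (feathered) mask value between 0 and 1 mixes the two images smoothly.

```python
def composite(original: list[list[float]], generated: list[list[float]],
              mask: list[list[float]]) -> list[list[float]]:
    """Blend two same-sized single-channel images using a 0-1 mask.

    mask == 1 takes the generated pixel, mask == 0 keeps the original,
    and values in between (a feathered edge) mix the two linearly.
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    out = []
    for row_o, row_g, row_m in zip(original, generated, mask):
        if not (len(row_o) == len(row_g) == len(row_m)):
            raise ValueError("images and mask must have the same width")
        out.append([m * g + (1.0 - m) * o for o, g, m in zip(row_o, row_g, row_m)])
    return out


original = [[10.0, 10.0, 10.0]]
generated = [[200.0, 200.0, 200.0]]
mask = [[0.0, 0.5, 1.0]]  # left kept, middle feathered, right repainted
print(composite(original, generated, mask))  # [[10.0, 105.0, 200.0]]
```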

Using LoRAs

What it's great for:

This SDXL workflow creates images with the SDXL base model and the refiner, and adds a LoRA to the generation.
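A LoRA stores a low-rank update for some of the model's weight matrices: two small matrices `B` (d x r) and `A` (r x k) whose product is added to the original weight `W`, scaled by the strength you set on the LoRA loader (`strength_model` on ComfyUI's LoraLoader) and, in many LoRA files, by an extra `alpha / rank` factor. The sketch below applies that update to a tiny 2 x 2 matrix in plain Python; the numbers are made up, `apply_lora` is a hypothetical helper, and real loaders patch many layers at once.

```python
from typing import Optional

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(w: Matrix, b: Matrix, a: Matrix, strength: float = 1.0,
               alpha: Optional[float] = None) -> Matrix:
    """Return W + strength * (alpha / rank) * (B @ A).

    rank is the inner dimension r; if alpha is None the extra scale is 1,
    i.e. alpha is assumed equal to the rank.
    """
    rank = len(a)
    scale = strength * ((alpha / rank) if alpha is not None else 1.0)
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


W = [[1.0, 0.0],
     [0.0, 1.0]]
B = [[1.0],
     [2.0]]       # d x r with rank r = 1
A = [[0.5, 0.5]]  # r x k
print(apply_lora(W, B, A, strength=1.0))  # [[1.5, 0.5], [1.0, 2.0]]
print(apply_lora(W, B, A, strength=0.5))  # [[1.25, 0.25], [0.5, 1.5]]
```

Because only `B` and `A` are stored, a LoRA file stays small, and lowering the strength simply shrinks the added update.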

Hidden Faces

What it's great for:

This ComfyUI workflow lets us hide faces or text within our images, which makes for some really creative content!

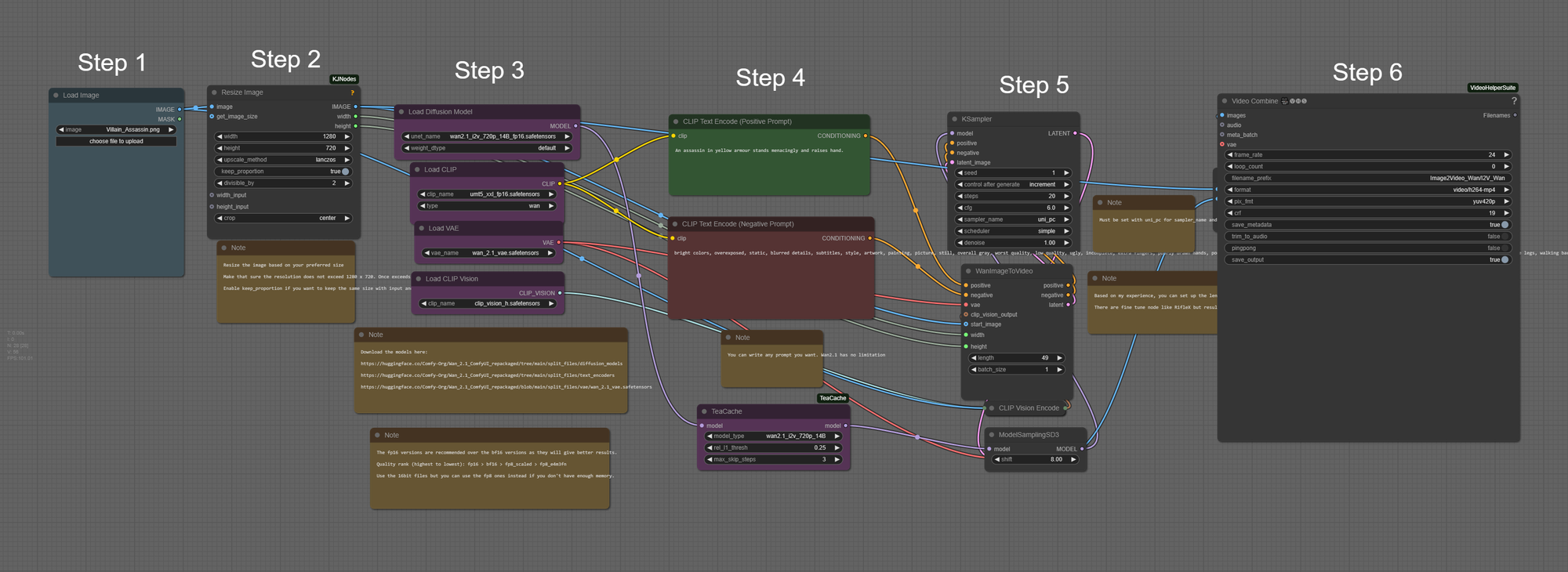

Wan Image2Video

What it's great for:

Wan 2.1's image-to-video (I2V) model turns still pictures into moving videos, producing smooth, detailed, and realistic animations. It's useful in many fields, such as social media content, educational videos, movie backgrounds and effects, and advertising. Wan 2.1 is powerful yet easy to use, helping creators make high-quality videos quickly without a big budget or expensive equipment.
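When setting the video length for Wan 2.1, the frame count is typically of the form 4n + 1 (for example 81 frames, about 5 seconds at Wan's usual 16 fps), because its video VAE compresses time by a factor of 4 after the first frame. Treat both the 16 fps and the 4n + 1 rule as assumptions to check against the node defaults in your workflow; `wan_frame_count` is a hypothetical helper that rounds a target duration to a valid length.

```python
def wan_frame_count(seconds: float, fps: int = 16) -> int:
    """Round a target duration to the nearest frame count of the form 4n + 1.

    Assumptions: 16 fps output and a 4x temporal VAE compression that
    keeps the first frame separate, hence lengths 1, 5, 9, ..., 81, ...
    """
    if seconds <= 0 or fps <= 0:
        raise ValueError("seconds and fps must be positive")
    raw = seconds * fps
    n = max(0, round((raw - 1) / 4))
    return 4 * n + 1


for secs in (1, 3, 5):
    frames = wan_frame_count(secs)
    print(f"{secs}s -> {frames} frames ({frames / 16:.2f}s at 16 fps)")
```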

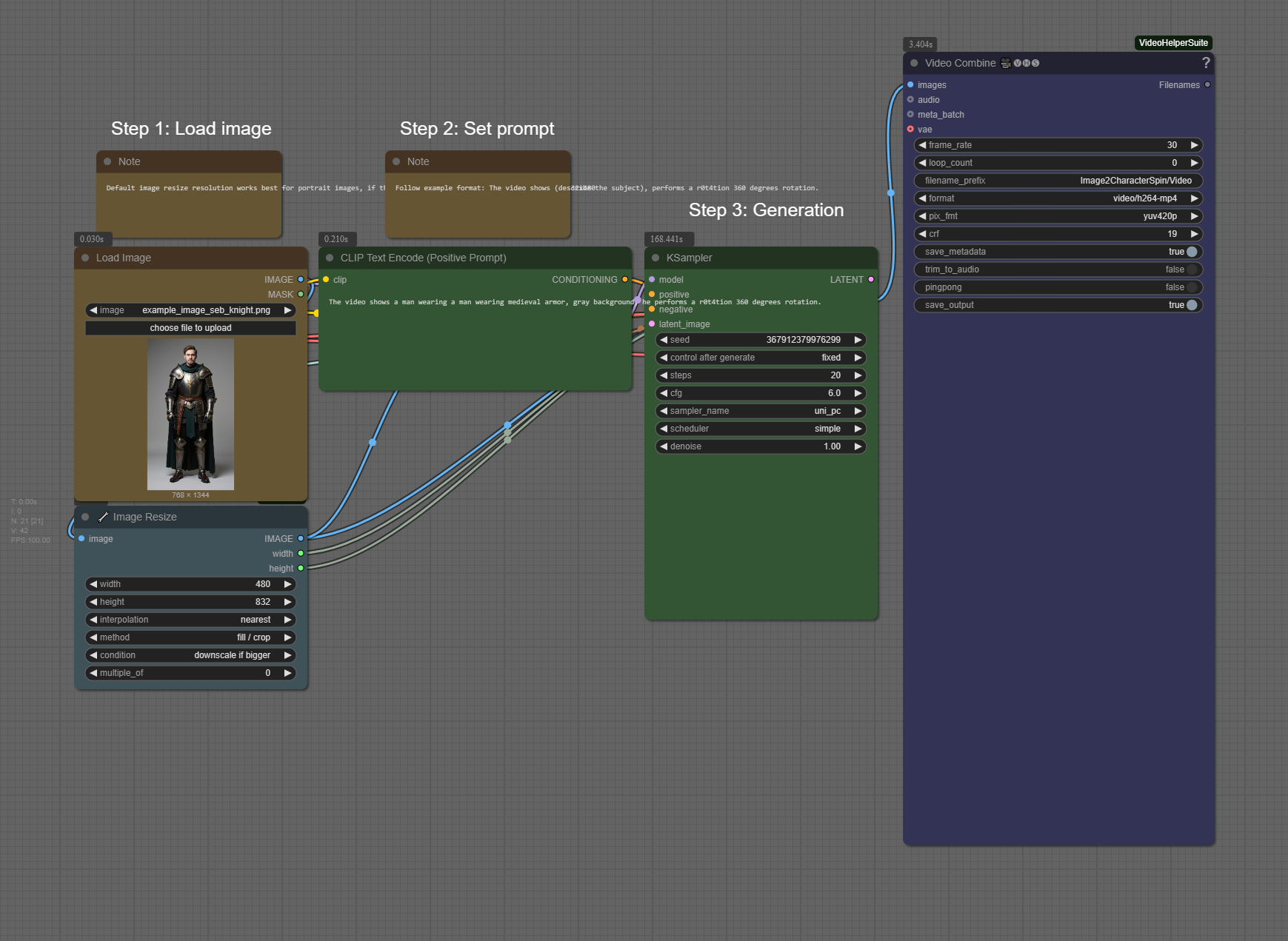

Image2Image Character Spin

What it's great for:

This workflow uses lightweight LoRAs from the Remade-AI repo, which make it easy to create unique and creative videos without needing a lot of computing power. These LoRAs are also versatile, letting you make videos in different styles, such as Pixar-style animation or realistic tornado scenes, which makes them useful for storytelling, advertising, education, and more. Because LoRAs don't require retraining the entire model, they save time and resources while still producing excellent results.

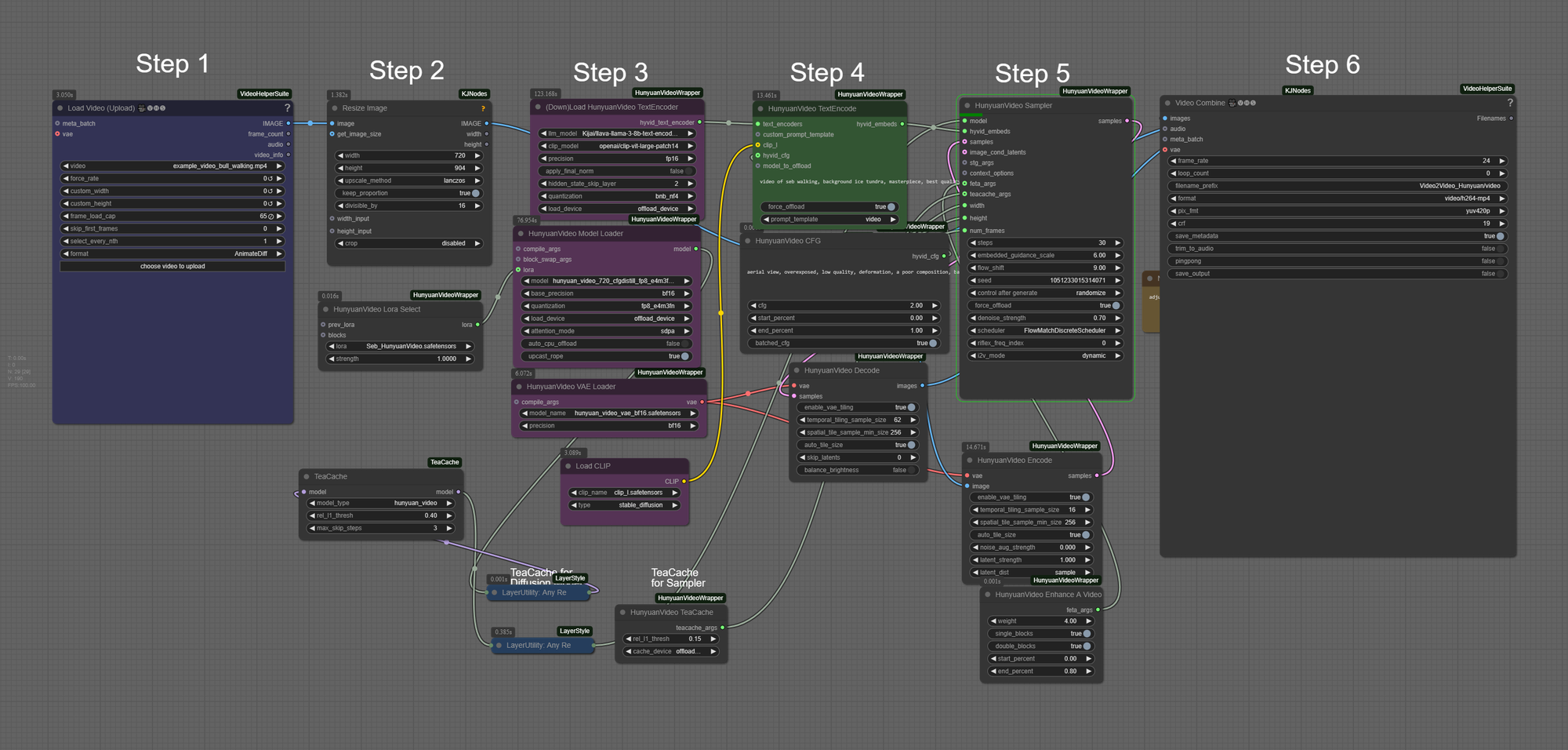

Hunyuan Video2Video

What it's great for:

This workflow is built on the Hunyuan Video framework and reimagines video content by combining the motion and structure of a source video with user-defined text prompts. It relies on a large 13-billion-parameter model and a 3D VAE to deliver high-quality, stable, and diverse outputs. A key feature is the ability to add new styles, elements, and effects to a video while keeping its original motion.
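The 3D VAE compresses video in both time and space. The HunyuanVideo paper describes compression ratios of 4 in time, 8 in height and width, and 16 latent channels, with the first frame encoded on its own. Under those assumptions, the sketch below estimates the latent tensor shape for a source clip, which shows why frame counts of the form 4n + 1 map cleanly onto latent frames. `hunyuan_latent_shape` is a hypothetical helper, not part of any ComfyUI node.

```python
def hunyuan_latent_shape(frames: int, height: int, width: int,
                         t_ratio: int = 4, s_ratio: int = 8,
                         channels: int = 16) -> tuple[int, int, int, int]:
    """Estimate (channels, latent_frames, latent_height, latent_width).

    Assumes a causal 3D VAE: the first frame becomes its own latent
    frame and each later group of `t_ratio` frames becomes one more.
    """
    if (frames - 1) % t_ratio != 0:
        raise ValueError(f"frames should be {t_ratio}n + 1, got {frames}")
    if height % s_ratio or width % s_ratio:
        raise ValueError(f"height and width should be divisible by {s_ratio}")
    latent_frames = (frames - 1) // t_ratio + 1
    return channels, latent_frames, height // s_ratio, width // s_ratio


print(hunyuan_latent_shape(129, 720, 1280))  # (16, 33, 90, 160)
```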

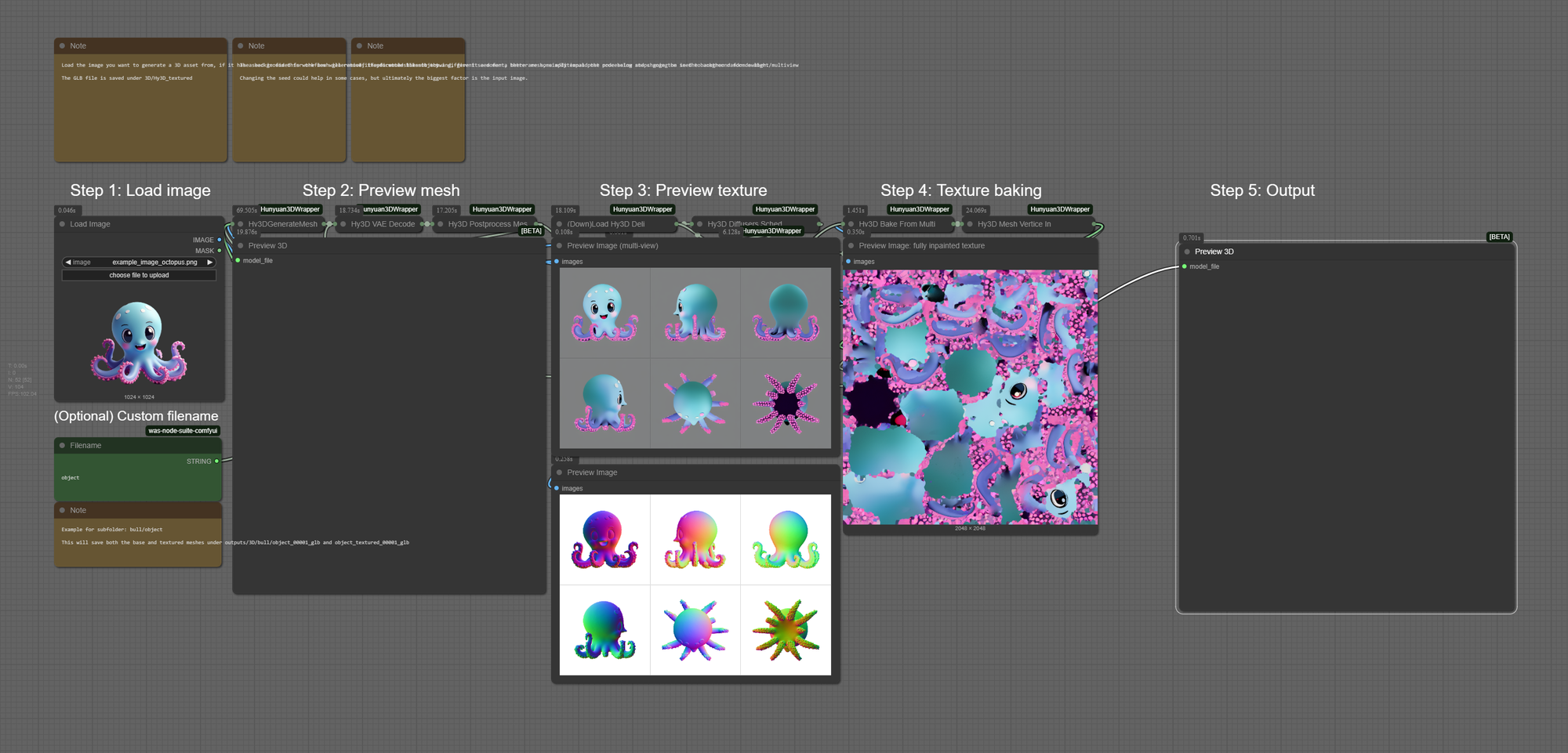

Hunyuan 3D

What it's great for:

This workflow is highly versatile, letting you create diverse 3D assets such as objects, characters, and environments from simple text or image inputs. It generates models quickly, in roughly 10–25 seconds, while keeping high fidelity in both geometry and textures. It is also user-friendly for editing, animating, and exporting 3D assets, making it accessible to professionals and beginners alike, and it is well suited to gaming, animation, virtual reality (VR), e-commerce, and education.

Consistent character with Flux and ComfyUI

What it's great for:

A consistent-character workflow keeps a character's visual appearance and personality uniform across different images, scenes, and media. This consistency helps audiences recognize and connect with the character, building the familiarity that storytelling, branding, and creative projects depend on. It gives you artistic freedom while keeping the character design consistent across applications like animation, gaming, and branding.

Dancing noodles ComfyUI Tutorial

What it's great for:

This is a creative workflow that transforms videos into unique, stylized animations by applying motion and artistic effects to objects or characters. It is popular for animating simple elements, like noodles, in visually engaging ways, making them appear to dance. It is particularly useful for fun animations for social media, marketing campaigns, and creative projects, and its combination of ease of use, flexibility, and high-quality output makes it a powerful tool for exploring video transformations.

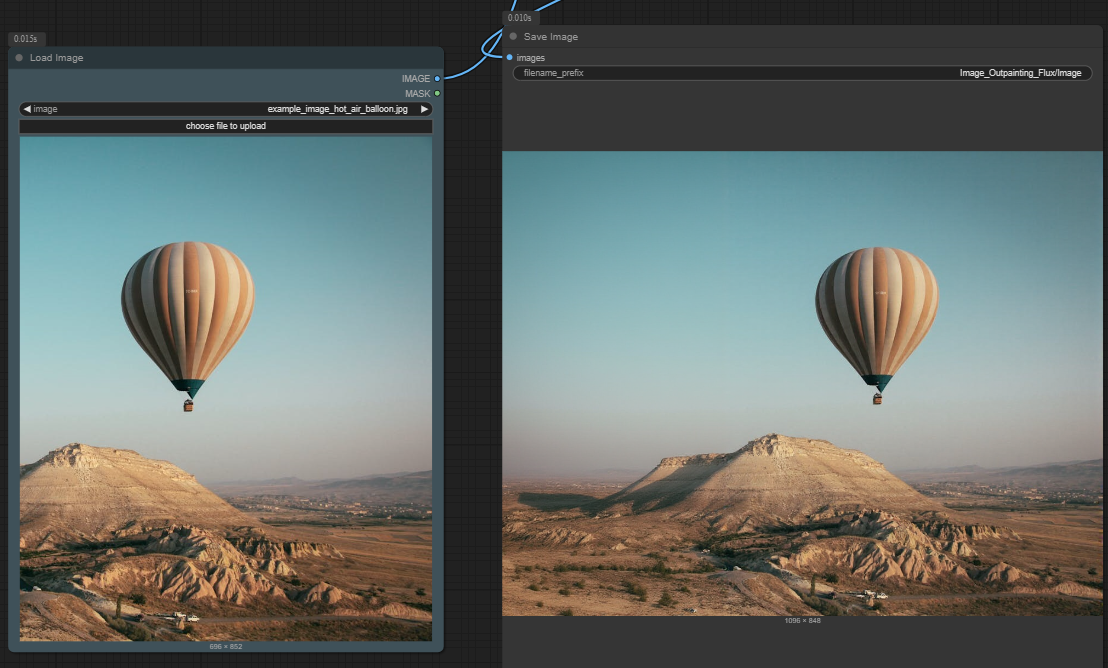

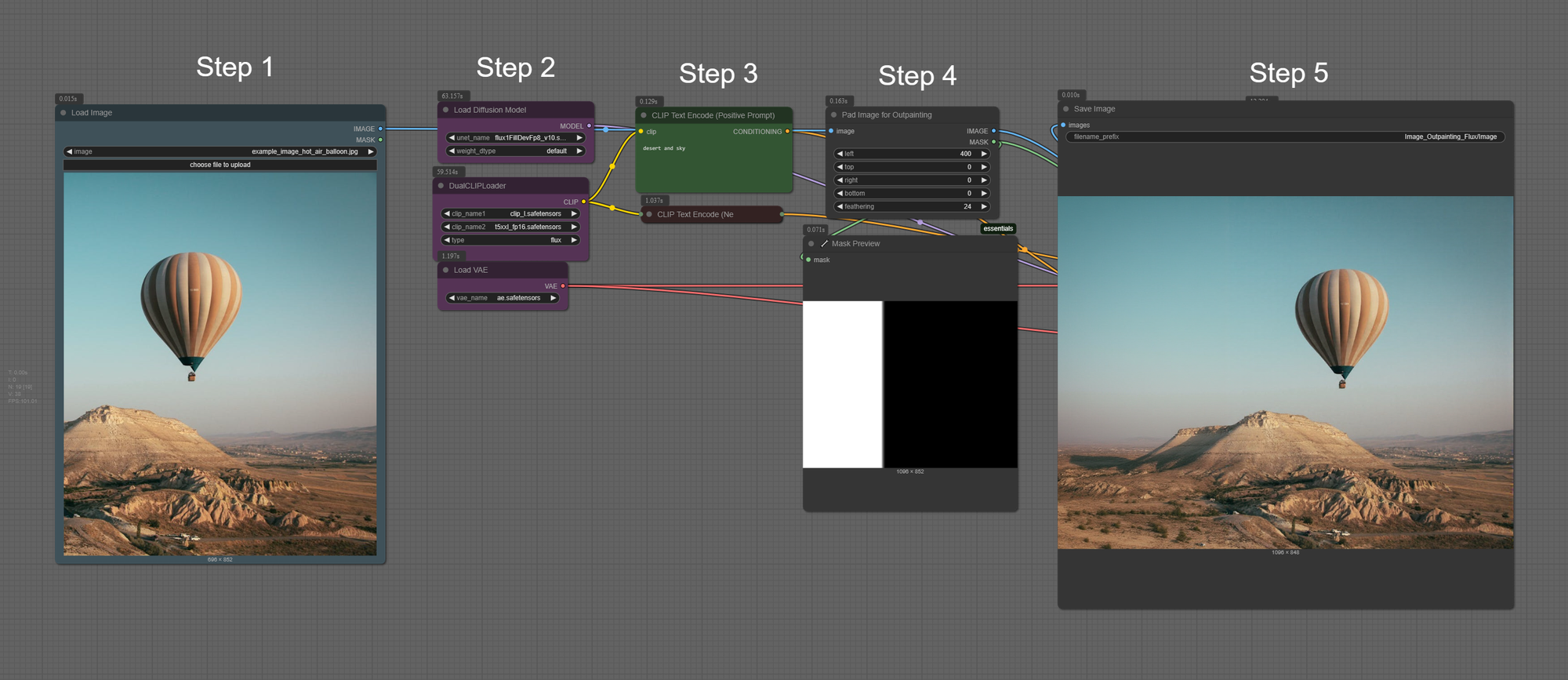

Outpainting in ComfyUI

What it's great for:

Outpainting extends the boundaries of an image by generating new content that blends seamlessly with the original. It is particularly useful for expanding the field of view, changing the aspect ratio, or adding new elements to improve a composition, making it a powerful tool for artists who want to extend or modify images without losing quality.
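Outpainting to a new aspect ratio starts by padding the canvas; ComfyUI's Pad Image for Outpainting node takes left, top, right, and bottom pixel amounts, and the sampler then fills in the padded area. The sketch below works out the padding needed to reach a target aspect ratio, rounding the padded side up to a multiple of 8 to suit the VAE. `outpaint_padding` is a hypothetical helper, and splitting the padding evenly between opposite sides is an assumption (you could extend one side only).

```python
import math


def outpaint_padding(width: int, height: int, target_ratio: float,
                     multiple: int = 8) -> dict[str, int]:
    """Padding (left, top, right, bottom) that brings width / height to
    roughly `target_ratio`, split evenly between opposite sides."""
    if width <= 0 or height <= 0 or target_ratio <= 0:
        raise ValueError("width, height and target_ratio must be positive")

    def round_up(value: float) -> int:
        return int(math.ceil(value / multiple)) * multiple

    pad = {"left": 0, "top": 0, "right": 0, "bottom": 0}
    if width / height < target_ratio:
        # Too narrow: add columns on the left and right.
        extra = round_up(height * target_ratio) - width
        pad["left"] = extra // 2
        pad["right"] = extra - extra // 2
    elif width / height > target_ratio:
        # Too wide: add rows on the top and bottom.
        extra = round_up(width / target_ratio) - height
        pad["top"] = extra // 2
        pad["bottom"] = extra - extra // 2
    return pad


print(outpaint_padding(1024, 1024, 16 / 9))
```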

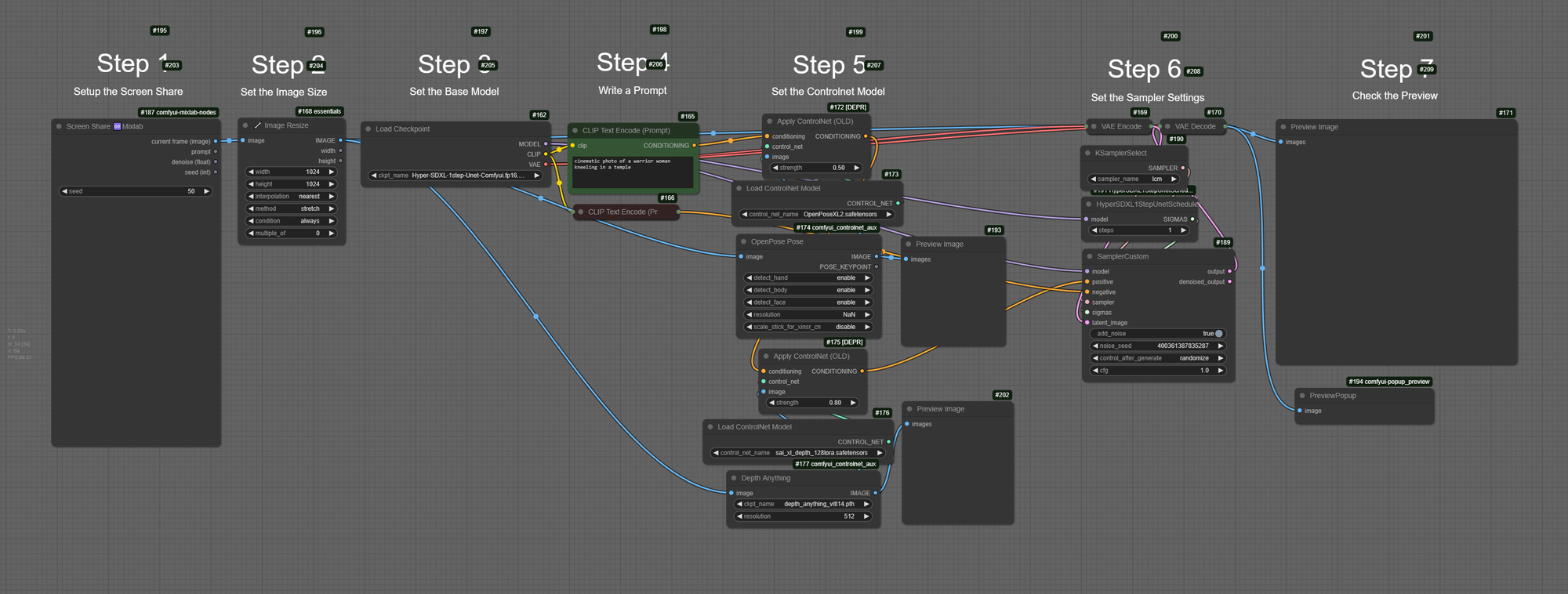

HyperSD and Blender

What it's great for:

Combining HyperSD and Blender in ComfyUI offers flexibility, speed, and high-quality output while remaining accessible to users of different skill levels. Whether you're an artist enhancing your 3D models or a designer creating animations with AI assistance, this workflow provides a streamlined way to produce professional-grade results efficiently.

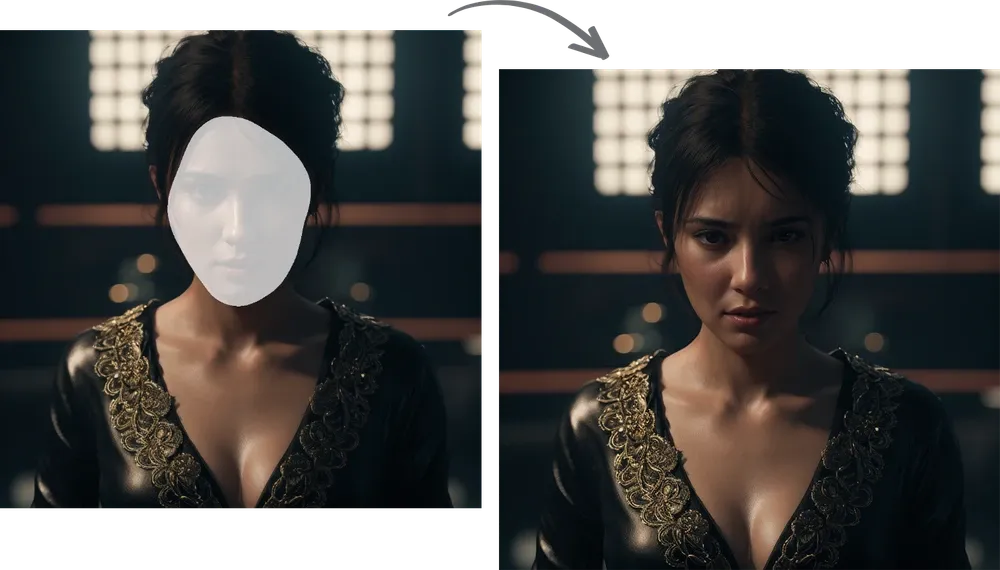

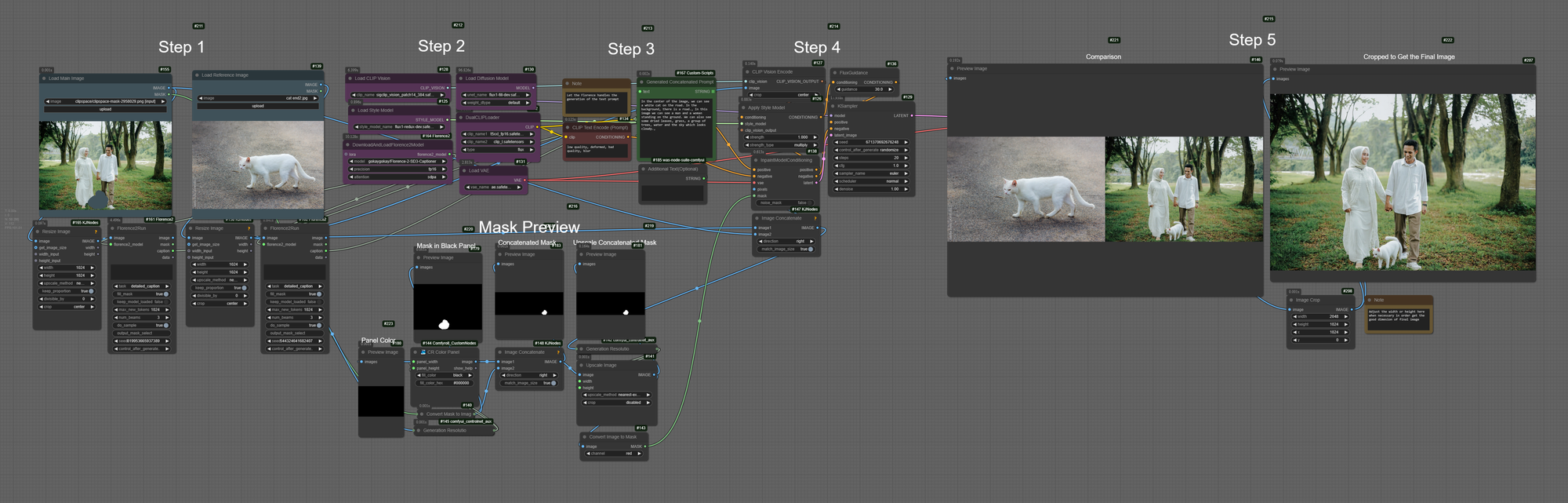

Inpainting using Reference Image

What it's great for:

This workflow lets you modify specific parts of an image using content from another image as a guide. It gives precise control over the inpainting process and keeps the result stylistically and contextually consistent, making it ideal for tasks such as editing portraits, restoring damaged images, or creating new variations of artwork.

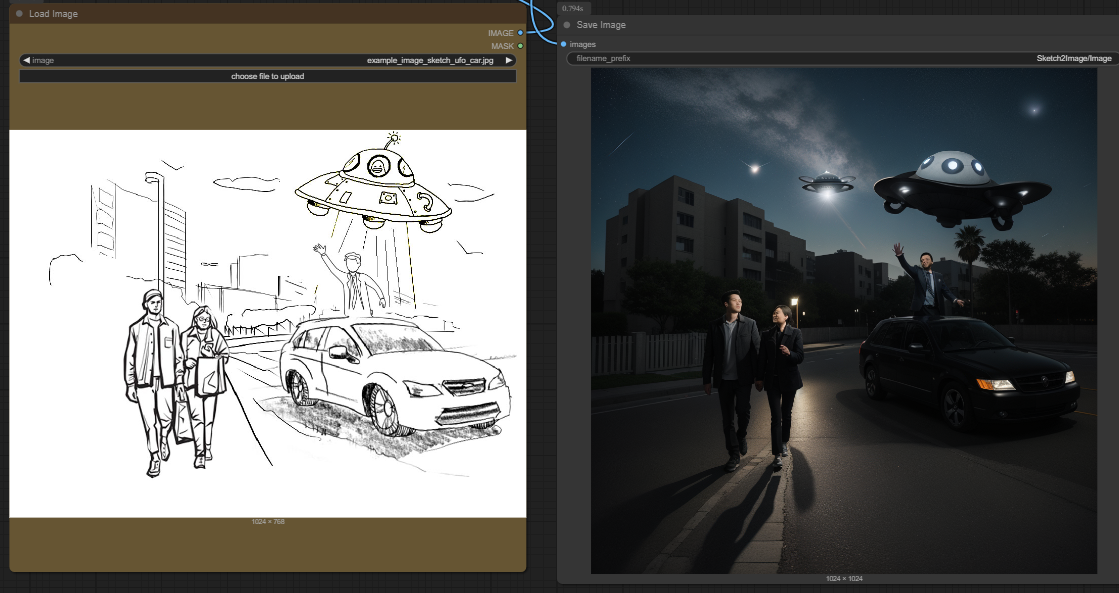

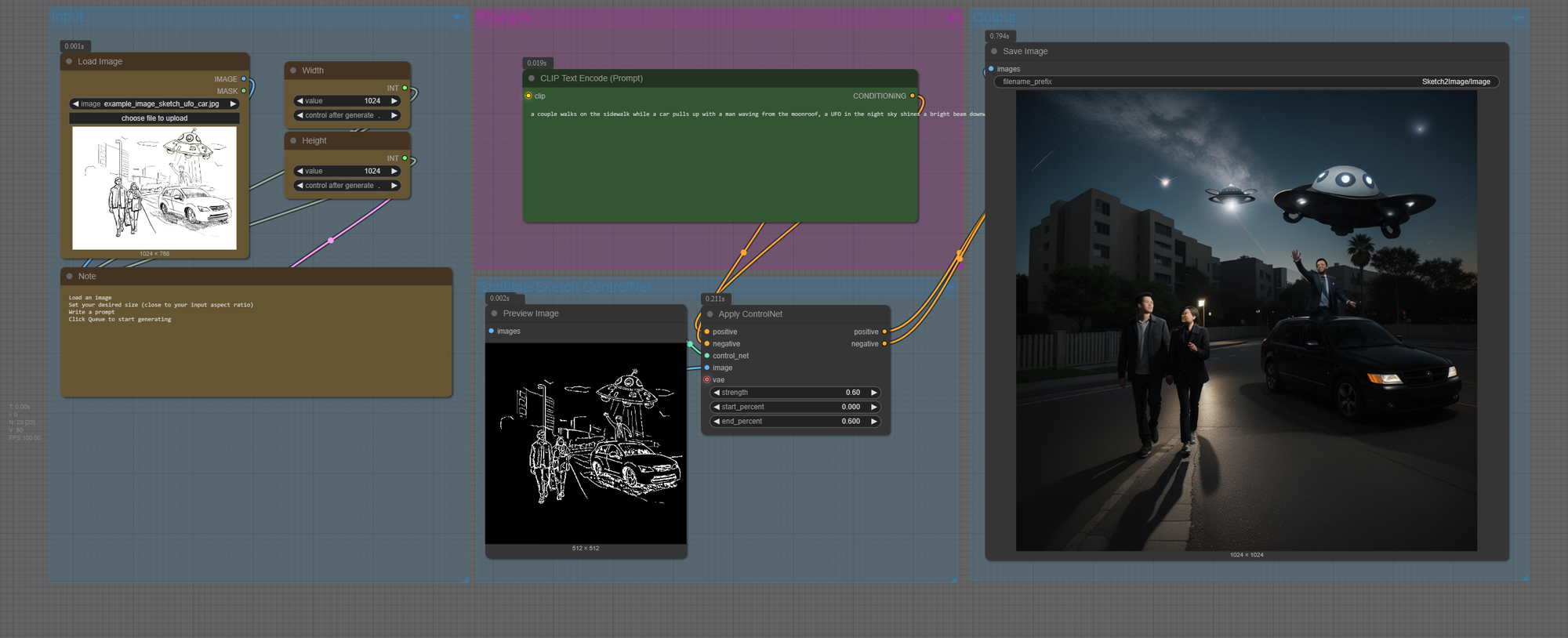

Sketch to Image

What it's great for:

This process is particularly useful for artists, designers, and creative enthusiasts who want to bring their ideas to life without needing advanced drawing skills. The workflow takes a sketch as input, processes it using AI to detect edges and structures, and then applies prompts to generate detailed and realistic images. Users can also include style images or descriptive text to further customize the output.
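The edge-detection step is usually handled by a preprocessor node (Canny or a line-art model, depending on the workflow). As a much simpler stand-in that shows the idea, the sketch below computes a Sobel gradient magnitude on a tiny grayscale grid and thresholds it into a binary edge map. It is not the Canny algorithm, `sobel_edges` is a hypothetical helper, and the threshold value is an arbitrary assumption.

```python
def sobel_edges(img: list[list[float]], threshold: float = 100.0) -> list[list[int]]:
    """Return a binary edge map (1 = edge) using Sobel gradient magnitude.

    Border pixels are left as 0 because their 3x3 neighbourhood is incomplete.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient kernel
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(kx[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# A dark left half and a bright right half: the edge is the vertical boundary.
sketch = [[0, 0, 255, 255] for _ in range(4)]
for row in sobel_edges(sketch):
    print(row)
```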

Pretty Comfy, Right?

Any of our workflows, including the ones above, can run on a local installation of SD, but if you're having installation issues or slow hardware, you can try them on a more powerful GPU in your browser with ThinkDiffusion.

If you'd like a way to make someone un-comfy, perhaps by making their lips move to different dialogue, check out my post on Wav2Lip.

Most importantly, y'all have fun out there getting Comfy with Stable Diffusion, and let us know what you're making!
